Statistical learning theory

Eventually including structural risk minimisation, risk bounds, hopefully-uniform convergence rates, VC-dimension, generalisation-and-stability framings etc

July 6, 2016 — August 16, 2016

Another placeholder far from my own background.

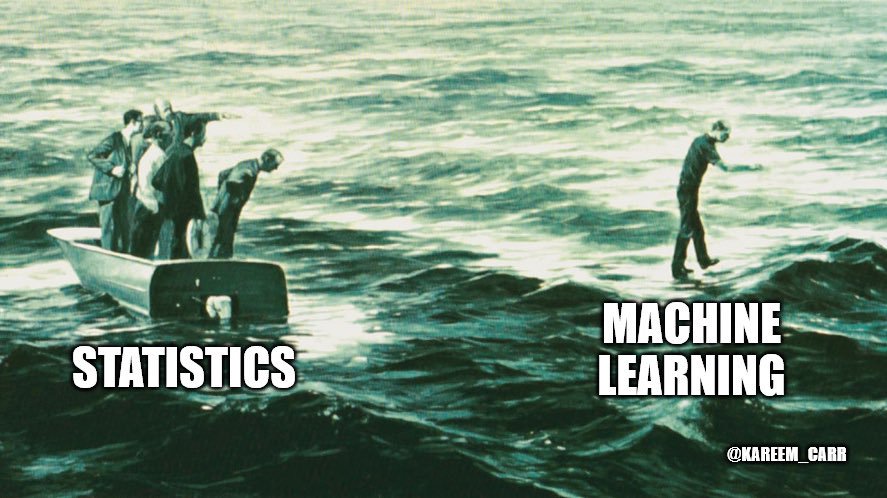

Given some amount of noisy data, how complex a model can I learn before I start failing to generalise to new data? If I can answer this question a priori, I can fit a complex model with some messy hyperparameter and choose that hyperparameter without doing boring cross-validation. When I did statistics we talked about this in terms of regularisation and [model selection](./model_selection.html). However, the ML people started thinking about this from the other end.

Depending on your training you might think about this in terms of Rademacher complexity, Gaussian complexity, Vapnik-Chervonenkis dimension, bias-variance tradeoffs… Modern results seem to appeal to concentration inequalities. Also apparently stability of estimators gets you somewhere?

AFAICT, nice statistical learning results apply to particular classes of models; e.g. SVMs and kernel regressions have built-in sample complexity results under the right loss function, but the ground gets rapidly shakier as you generalise.

1 VC dimension
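
To make the definition concrete for myself: a class shatters a point set if it can realise every possible labelling of it, and the VC dimension is the size of the largest shattered set. Here is a minimal brute-force sketch for two toy classes on the real line, thresholds and closed intervals. The function names are my own for illustration, and the points are assumed distinct. Searching a finite pool only gives a lower bound in general; for these two classes only the ordering of the points matters, so the pool answer should match the textbook values.

```python
from itertools import combinations


def _cuts(points):
    """Candidate cut locations: below all points, between neighbours, above all."""
    pts = sorted(points)
    mids = [(a + b) / 2 for a, b in zip(pts, pts[1:])]
    return [pts[0] - 1.0] + mids + [pts[-1] + 1.0]


def threshold_labelings(points):
    """Labelings of `points` realisable by h_t(x) = 1[x >= t]."""
    return {tuple(int(x >= t) for x in points) for t in _cuts(points)}


def interval_labelings(points):
    """Labelings realisable by h_{a,b}(x) = 1[a <= x <= b] (empty if a > b)."""
    cuts = _cuts(points)
    return {tuple(int(a <= x <= b) for x in points) for a in cuts for b in cuts}


def is_shattered(points, labelings_fn):
    """True if every one of the 2^k labelings of the k points is realisable."""
    return len(labelings_fn(points)) == 2 ** len(points)


def vc_dimension_on_pool(pool, labelings_fn, max_size):
    """Largest k <= max_size such that some k-subset of `pool` is shattered."""
    best = 0
    for k in range(1, max_size + 1):
        if any(is_shattered(list(s), labelings_fn) for s in combinations(pool, k)):
            best = k
        else:
            # Subsets of a shattered set are shattered, so we can stop here.
            break
    return best


pool = [0.0, 1.0, 2.0, 3.0]
print(vc_dimension_on_pool(pool, threshold_labelings, 4))
print(vc_dimension_on_pool(pool, interval_labelings, 4))
```

Intervals fail on three points because the labelling (1, 0, 1) would need a gap in the middle.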

2 Rademacher complexity
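
As I understand it, the empirical Rademacher complexity of a class on a fixed sample is the expected best correlation the class can achieve with uniformly random ±1 labels, i.e. how well it can fit pure noise. A rough Monte Carlo sketch for a finite class follows, with Massart's finite-class lemma as a sanity check. The helper names and the threshold-classifier example are assumptions of mine, not from any particular library.

```python
import math
import random


def empirical_rademacher(predictions, n_draws=500, seed=0):
    """Monte Carlo estimate of E_sigma[ max_h (1/n) * sum_i sigma_i * h(x_i) ].

    `predictions` holds one prediction vector per hypothesis, with entries
    in {-1, +1}, all evaluated on the same n sample points.
    """
    rng = random.Random(seed)
    n = len(predictions[0])
    total = 0.0
    for _ in range(n_draws):
        sigma = [rng.choice((-1, 1)) for _ in range(n)]
        best = max(sum(s * p for s, p in zip(sigma, h)) for h in predictions)
        total += best / n
    return total / n_draws


def threshold_predictions(xs):
    """Prediction vectors of every distinct threshold classifier on xs."""
    pts = sorted(xs)
    mids = [(a + b) / 2 for a, b in zip(pts, pts[1:])]
    cuts = [pts[0] - 1.0] + mids + [pts[-1] + 1.0]
    return [[1 if x >= t else -1 for x in xs] for t in cuts]


def massart_bound(num_vectors, n):
    """Massart's lemma for +/-1 prediction vectors: sqrt(2 ln|H_S| / n)."""
    return math.sqrt(2.0 * math.log(num_vectors) / n)


xs = [i / 50 for i in range(50)]
preds = threshold_predictions(xs)
estimate = empirical_rademacher(preds)
print(estimate, massart_bound(len(preds), len(xs)))
```

The estimate should sit below the Massart bound, which ignores the structure of the class and only counts distinct prediction vectors.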

3 Stability-based

Apparently stability of estimators gets you somewhere?

4 PAC-learning

Probably approximately correct, you mean?
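
The simplest concrete instance I know of is a finite hypothesis class with a loss bounded in [0, 1]: Hoeffding's inequality plus a union bound says that, with probability at least 1 − δ, every hypothesis has empirical risk within sqrt(ln(2|H|/δ) / (2n)) of its true risk. Inverting that gives a sample size that is "probably" (δ) "approximately" (ε) enough. A small sketch, with function names of my own invention:

```python
import math


def uniform_deviation_bound(n, num_hypotheses, delta):
    """Hoeffding + union bound: with probability >= 1 - delta, all hypotheses
    have |true risk - empirical risk| at most this value (loss in [0, 1])."""
    return math.sqrt(math.log(2 * num_hypotheses / delta) / (2 * n))


def sample_size_for(epsilon, num_hypotheses, delta):
    """Smallest n for which the bound above is at most epsilon."""
    return math.ceil(math.log(2 * num_hypotheses / delta) / (2 * epsilon ** 2))


n = sample_size_for(epsilon=0.05, num_hypotheses=1000, delta=0.05)
print(n, uniform_deviation_bound(n, 1000, 0.05))
```

Note the dependence on the class size is only logarithmic, while the dependence on ε is quadratic. This is a worst-case bound; it says nothing about how tight it is for any particular problem.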

5 Non-I.I.D data

6 Stein’s method

See Stein’s method

7 Incoming

Percy Liang’s course notes give a rapid overview: CS229T/STAT231: Statistical Learning Theory (Winter 2014).

See also function approximation, and/or model selection for the statisticians’ approach, which is more about working out which model our data can support once we have fit it, frequently by appealing to asymptotic large-sample results. In learning theory it seems one always cares about finite-sample bounds, which is reasonable. The attractiveness of getting them without, e.g., tedious and computationally expensive cross-validation or bootstrapping is understandable.

I would like to understand the relationship between the different kinds of convergence rate results that we get, and different learning theories.

I would like results that applied to dependent data.

(Golubev and Nussbaum 1990; Hasminskii and Ibragimov 1990) give minimax convergence rates in Sobolev class regression.

This looks like fun (David, Moran, and Yehudayoff 2016):

> We begin with the setting of multiclass categorization (zero/one loss). We prove that in this case learnability is equivalent to compression of logarithmic sample size, and that uniform convergence implies compression of constant size. We then consider Vapnik’s general learning setting: we show that in order to extend the compressibility-learnability equivalence to this case, it is necessary to consider an approximate variant of compression.