The network topology that more or less kicked off the current revolution in computer vision and thus the whole modern neural network craze.

Convolutional nets (convnets or CNNs to the suave) are well described elsewhere. I’m going to collect some choice morsels here. The short version: classic signal processing baked into neural networks.

There is a long story about how convolutions naturally encourage certain invariances and symmetries (translation being the obvious one), although AFAICT it’s all somewhat hand-wavy.
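The least hand-wavy piece of that story is translation: shifting the input and then convolving gives the same result as convolving and then shifting. Here is a minimal sketch of that property, assuming circular (wrap-around) boundaries so that it holds exactly. The helper name `circular_conv1d` and the filter values are made up for illustration.

```python
import numpy as np

def circular_conv1d(x, h):
    """Circular convolution of a signal x with a short filter h."""
    y = np.zeros(len(x))
    for k, hk in enumerate(h):
        # np.roll(x, k)[i] == x[(i - k) % n], i.e. x delayed by k samples
        y += hk * np.roll(x, k)
    return y

rng = np.random.default_rng(0)
x = rng.standard_normal(16)
h = np.array([0.25, 0.5, 0.25])  # hypothetical smoothing filter, not a learned one
shift = 3

# Equivariance: shifting then filtering equals filtering then shifting.
shift_then_filter = circular_conv1d(np.roll(x, shift), h)
filter_then_shift = np.roll(circular_conv1d(x, h), shift)
equivariant = np.allclose(shift_then_filter, filter_then_shift)

# Invariance: a global max over positions ignores the shift entirely.
invariant = np.isclose(shift_then_filter.max(), circular_conv1d(x, h).max())

print(equivariant, invariant)
```

With zero padding instead of wrap-around, the same identity holds only away from the edges, which is one reason the general story gets blurry.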

A convnet generally uses learned FIR (finite impulse response) filters plus some smudgy “pooling” that downsamples the filtered output.
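To make that concrete, here is a rough one-dimensional sketch: an FIR filter is a convolution with a short kernel, and max pooling keeps the largest value in each window. The function names, the toy signal, and the first-difference taps are all illustrative assumptions; in a real convnet the taps are learned, and most deep learning libraries apply the kernel unflipped (cross-correlation).

```python
import numpy as np

def fir_filter(x, taps):
    """FIR filter: y[n] = sum_k taps[k] * x[n - k], keeping only fully overlapping outputs."""
    return np.convolve(x, taps, mode="valid")

def max_pool1d(x, width):
    """Non-overlapping max pooling; trailing samples that do not fill a window are dropped."""
    n = (len(x) // width) * width
    return x[:n].reshape(-1, width).max(axis=1)

x = np.array([0.0, 1.0, 2.0, 3.0, 2.0, 1.0, 0.0, 1.0, 2.0])  # toy signal
taps = np.array([1.0, -1.0])  # first-difference filter (hypothetical, not learned)

filtered = fir_filter(x, taps)     # x[n] - x[n-1]
pooled = max_pool1d(filtered, 2)   # coarse summary of the filter response

print(filtered)
print(pooled)
```

The pooled output is half the length of the filtered one and no longer records exactly where within each window the response occurred, which is the “smudgy” part.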

Visualising

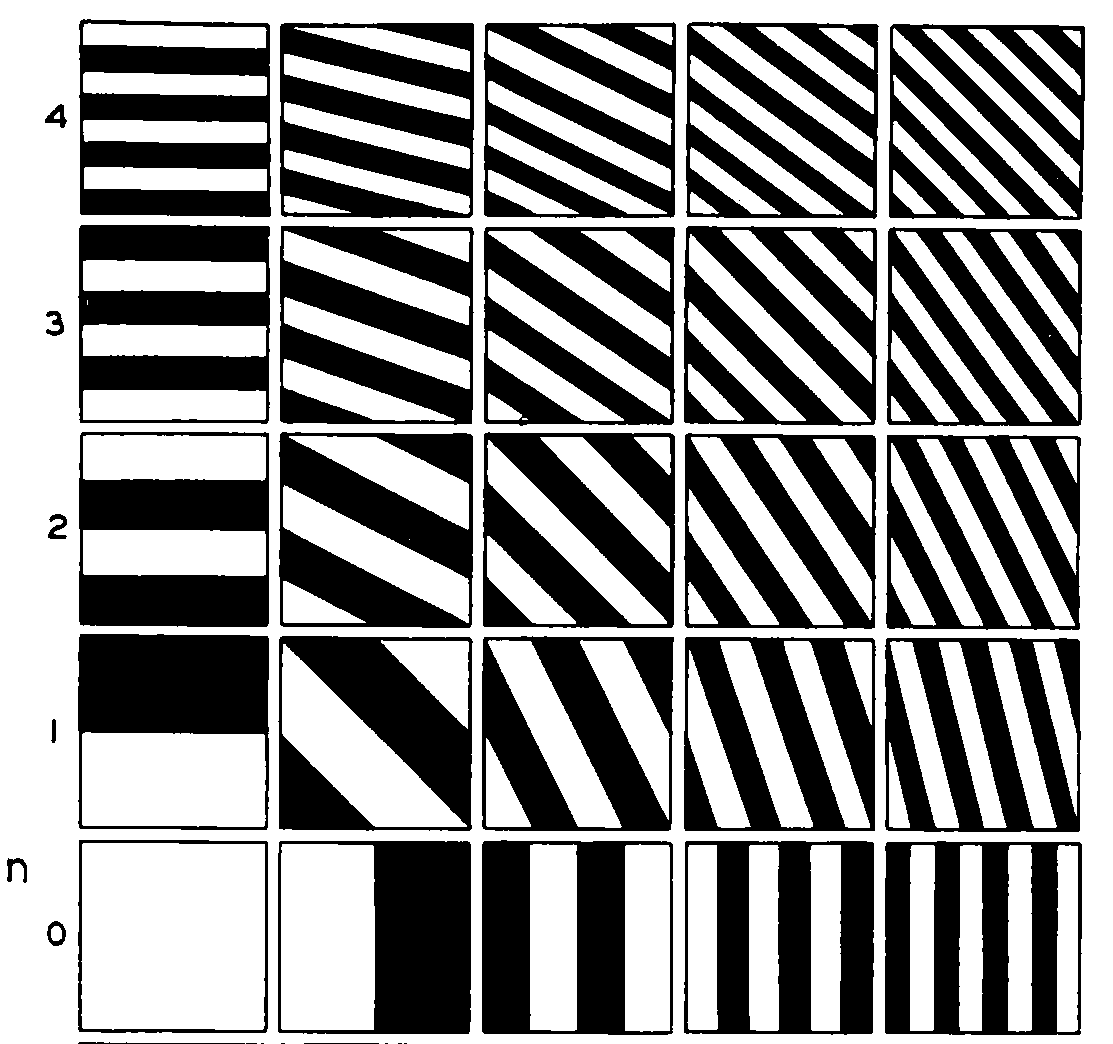

Here is a visualisation of convolution arithmetic: vdumoulin/conv_arithmetic

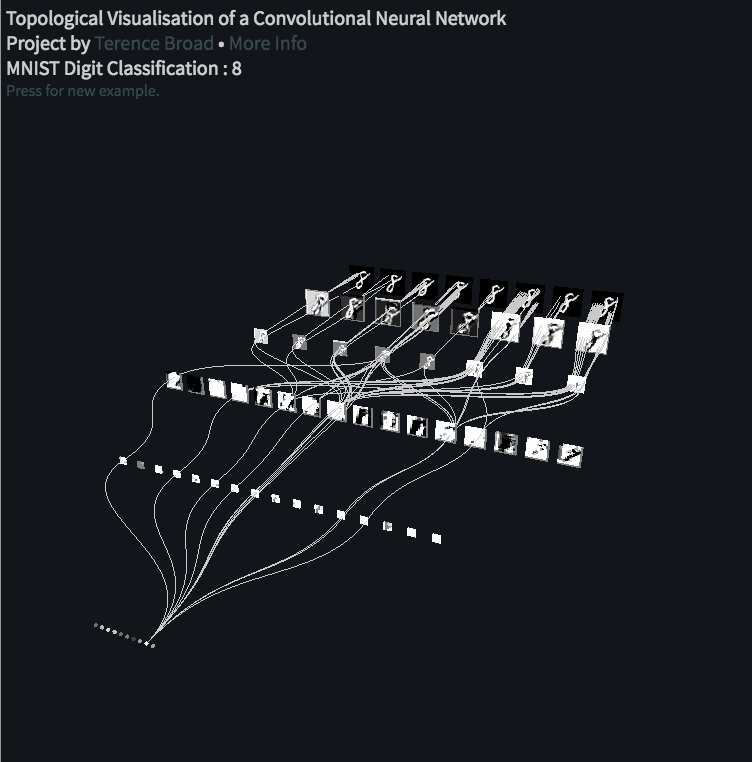
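The bookkeeping behind those pictures is the relationship between input size, kernel size, stride and padding. A small sketch of the standard output-size formulas along one axis follows; the function names are mine, and the transposed case assumes no extra output padding.

```python
def conv_output_size(i, k, s=1, p=0, d=1):
    """Output length for input length i, kernel k, stride s, zero padding p, dilation d."""
    k_eff = d * (k - 1) + 1  # a dilated kernel spans this many input samples
    return (i + 2 * p - k_eff) // s + 1

def transposed_conv_output_size(i, k, s=1, p=0):
    """Output length of a transposed convolution, assuming no extra output padding."""
    return s * (i - 1) + k - 2 * p

print(conv_output_size(4, 3))                        # no padding, unit stride
print(conv_output_size(5, 3, s=2, p=1))              # padding 1, stride 2
print(conv_output_size(7, 3, d=2))                   # dilation 2
print(transposed_conv_output_size(3, 3, s=2, p=1))   # roughly undoes the stride-2 case
```

For two-dimensional images the same formula applies independently to height and width.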

Visualising the actual activations of a convnet is an interesting data visualisation challenge, since intermediate activations often end up being high-rank tensors. They have a lot of regularity that can be exploited, though, so it feels like it should be feasible.
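One crude way to exploit that regularity is to treat each channel of a layer's output as a small image and tile the channels into a single grid. The following is a sketch only, assuming the activations for one input arrive as a (channels, height, width) array; `tile_activations` is a hypothetical helper and the random array stands in for real activations.

```python
import numpy as np

def tile_activations(acts, n_cols=None, pad=1):
    """Arrange a (channels, height, width) array into one 2D grid image."""
    c, h, w = acts.shape
    if n_cols is None:
        n_cols = int(np.ceil(np.sqrt(c)))  # aim for a roughly square grid
    n_rows = int(np.ceil(c / n_cols))

    # Rescale each channel to [0, 1] so that faint channels remain visible.
    lo = acts.min(axis=(1, 2), keepdims=True)
    hi = acts.max(axis=(1, 2), keepdims=True)
    scaled = (acts - lo) / np.maximum(hi - lo, 1e-12)

    # NaN marks borders and unused cells.
    grid = np.full((n_rows * (h + pad) + pad, n_cols * (w + pad) + pad), np.nan)
    for idx in range(c):
        row, col = divmod(idx, n_cols)
        top = pad + row * (h + pad)
        left = pad + col * (w + pad)
        grid[top:top + h, left:left + w] = scaled[idx]
    return grid

acts = np.random.default_rng(0).standard_normal((10, 6, 6))  # stand-in for real activations
grid = tile_activations(acts)
print(grid.shape)
```

The resulting array can be handed to any image plotting routine. Per-channel rescaling is a design choice: it makes every channel legible but throws away the relative magnitudes between channels.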
