Highly performative computing

Research cluster computing

March 9, 2018 — January 6, 2023

On getting things done on the Big Computer your supervisor is convinced will solve that Big Problem with Big Data because that was the phrasing used in the previous funding application.

I’m told the experience of HPC on campus is different for, e.g. physicists, who are working on a software stack co-evolved over decades with HPC clusters, and start from different preconceptions about what computers do. For us machine-learning types, though, the usual scenario is as follows (and I have been on-boarded 4 times to different clusters with similar pain points by now):

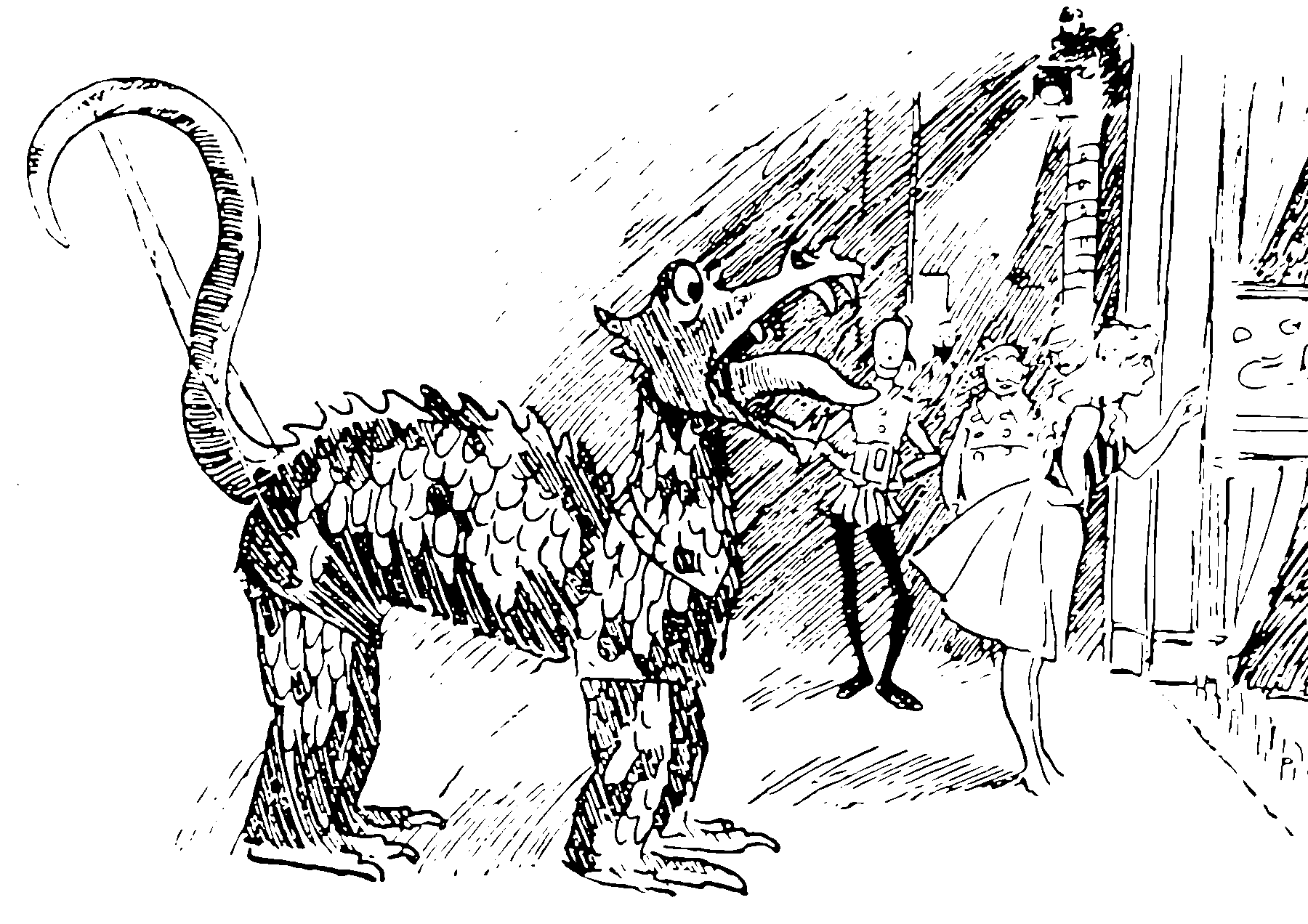

Our cluster uses some job manager pre-dating many modern trends in cloud computing, including the word “cloud” applied to computing. Perhaps the cluster is based upon Platform LSF, Torque or slurm. Typically I don’t care about the details of this, since I have a vague suspicion that the entire world of traditional HPC is asymptotically approaching 0% market share as measured by share of jobs, and the main question is merely whether that approach is fast enough to save me. All I know is that my fancy-pants modern cloud-kubernetes-containerized-apache-spark-hogwild-SGD project, leveraging some disreputable stuff I found on github, will not run here in the manner that Google intended. And that if I try to ask for containerization the system responds with the error:

We generally need to work in these environments because typical institutions prefer

- the feeling of security that comes from buying big things on a 5-year procurement timeline to

- the horrifying prospect of giving staff billing privileges for the cloud.

Like most people, the first time I found myself in such a situation I read the help documents that the IT staff wrote, but they were targeted at people who had never heard of the terminal before, and their effort to hide complexity also made them essentially useless, in that I could not work out what was going on in between all the baby’s-first-UNIX instructions. So I spent time searching on the internet for the few useful keywords they let slip, and discovered that some other cluster computing researchers occasionally post their discoveries, whose instructions I can cargo-cult.

So now I keep notes on the question: how can we get usable calculation from these cathedrals of computing, while filling up my time and brain space with the absolute minimum that I possibly can about anything to do with their magnificently baroque, obsolescent architecture? Because too much knowledge would detract from writing job applications for hip dotcoms.

UPDATE: I am no longer a grad student. I work at CSIRO’s Data61, where we have HPC resources in an intermediate state: some containerization, some cloud and much SLURM.

1 Job management

See HPC job submission.

2 Software dependency and package management

With high likelihood, the campus cluster is running some ancient decrepit edition of RHEL with too elderly a version of everything to run anything you need. There will be some weird semi-recent version of python that you can activate, but in practice even that will be some awkward version that does not mesh with the latest code that you are using (you are doing research after all, right?). You will need to install everything you use. That is fine, but be aware it’s a little different from provisioning virtual machines on your new-fangled fancy cloud thingy. This is to teach you important and hugely useful lessons for later in life, such as which compile flags to set to get the matrix kernel library version 8.7.3patch3 to compile with python 2.5.4rc3 for the itanium architecture as at May 8, 2009. Why, think on how many times you will use that skill after you leave your current job! (We call such contemplation void meditation and it is a powerful mindfulness technique.)

There are lots of package managers and dependency systems; I suspect most of them work on HPC machines. I use homebrew and then additional python environments on top of that.

For HPC specifically, spack has also been recommended to me.

3 Deployment management

DIY cluster. Normally HPC is based on requesting jobs via slurm and some shared file system. For my end of the budget this also means breaking up the job into small chunks and squeezing them in around the edges of people with a proper CPU allocation. However, some people are blessed with the ability to request simultaneous control of a predictable number of machines. For these, you can roll your own deployment of execution across some machines, which might be useful. This might also work if you have a bunch of unmanaged machines not on the campus cluster, which I have personally never experienced.

If you have a containerized deployment, as with all the cloud providers these days, see perhaps a containerized deployment solution such as Apptainer, if you are blessed with admins who support it.

For traditional clusters, ClusterShell:

ClusterShell is an event-driven open source Python library, designed to run local or distant commands in parallel on server farms or on large Linux clusters. No need to reinvent the wheel: you can use ClusterShell as a building block to create cluster aware administration scripts and system applications in Python. It will take care of common issues encountered on HPC clusters, such as operating on groups of nodes, running distributed commands using optimized execution algorithms, as well as gathering results and merging identical outputs, or retrieving return codes. ClusterShell takes advantage of existing remote shell facilities already installed on your systems, like SSH.

ClusterShell’s primary goal is to improve the administration of high-performance clusters by providing a lightweight but scalable Python API for developers. It also provides clush, clubak and nodeset/cluset, convenient command-line tools that allow traditional shell scripts to benefit from some of the library features.
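To get a feel for what “operating on groups of nodes” and “merging identical outputs” mean in practice, here is a minimal standard-library sketch. It is not the ClusterShell API; `expand_nodes` and `merge_identical_outputs` are hypothetical helper names I made up for illustration, and the range syntax handled is a deliberately tiny subset of what real node-set tools accept.

```python
import re
from collections import defaultdict


def expand_nodes(pattern):
    """Expand a simple range pattern such as 'foo[0-3]' into host names.

    Only a single numeric range is handled; real node-set syntax is richer.
    """
    match = re.fullmatch(r"(.*)\[(\d+)-(\d+)\](.*)", pattern)
    if match is None:
        return [pattern]  # a plain host name, nothing to expand
    prefix, start, stop, suffix = match.groups()
    return [f"{prefix}{i}{suffix}" for i in range(int(start), int(stop) + 1)]


def merge_identical_outputs(results):
    """Group hosts by the output they produced.

    `results` maps host name -> output string. The return value maps each
    distinct output -> sorted list of the hosts that produced it.
    """
    groups = defaultdict(list)
    for host, output in results.items():
        groups[output].append(host)
    return {output: sorted(hosts) for output, hosts in groups.items()}


if __name__ == "__main__":
    # Made-up outputs: pretend every node reports a kernel version.
    hosts = expand_nodes("foo[0-3]")
    fake_results = {host: "5.14.0" for host in hosts}
    fake_results["foo2"] = "4.18.0"  # one node lagging behind
    for output, group in merge_identical_outputs(fake_results).items():
        print(f"{','.join(group)}: {output}")
```

The point of merging is that on a healthy cluster most nodes say the same thing, so the interesting information is the handful of nodes that disagree.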

A little rawer: pdsh:

pdsh is a variant of the rsh(1) command. Unlike rsh(1), which runs commands on a single remote host, pdsh can run multiple remote commands in parallel. pdsh uses a “sliding window” (or fanout) of threads to conserve resources on the initiating host while allowing some connections to time out. For example, the following would duplicate using the ssh module to run hostname(1) across the hosts foo[0-10]:
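The “sliding window” idea can be sketched with the Python standard library. This is an illustration under my own assumptions, not how pdsh is implemented: a bounded thread pool stands in for the fanout, and `run_on_hosts` and `local_stand_in` are hypothetical names. The stand-in command runs locally so the example works without any cluster; for real remote execution one might build something like `["ssh", host, "hostname"]` instead.

```python
import subprocess
import sys
from concurrent.futures import ThreadPoolExecutor


def run_on_hosts(hosts, make_command, fanout=4, timeout=30.0):
    """Run one command per host, at most `fanout` at a time.

    `make_command(host)` returns an argument list for subprocess.run.
    Returns a dict mapping host -> (return code, stdout). A host whose
    command times out gets (None, "").
    """

    def run_one(host):
        try:
            done = subprocess.run(
                make_command(host),
                capture_output=True,
                text=True,
                timeout=timeout,
            )
            return host, (done.returncode, done.stdout.strip())
        except subprocess.TimeoutExpired:
            return host, (None, "")

    # The pool size caps how many commands are in flight at once; as soon
    # as one finishes, the next host in the list is started.
    with ThreadPoolExecutor(max_workers=fanout) as pool:
        return dict(pool.map(run_one, hosts))


def local_stand_in(host):
    """Hypothetical command builder that stays on the local machine."""
    script = "import sys; print('hello from', sys.argv[1])"
    return [sys.executable, "-c", script, host]


if __name__ == "__main__":
    results = run_on_hosts(["foo0", "foo1", "foo2"], local_stand_in, fanout=2)
    for host, (code, out) in sorted(results.items()):
        print(host, code, out)
```

A small fanout keeps the initiating host from opening hundreds of connections at once, and the per-command timeout means one dead node does not stall the whole run.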

3.1 Incoming

There is a gradual convergence in progress, between classic-flavoured campus clusters and trendier self-service clouds. To get a feeling for how this happens, I recommend Simon Yin’s test drive of the “Nimbus” system, which is an Australian research-oriented hybrid-style system.