Social factors in information security

Our revealed preference for revealing our preferences

November 21, 2018 — August 7, 2022

Why is confidentiality hard?

One reason infosec is hard is that, as with pollution, the incentives are misaligned. It is you who bears the cost of my laziness. Also vice versa, which is even more terrible because I know that if you are anything like me, you are a lazy blob who is taking all kinds of risks with my personal information. After all, it would only serve me right for all the blobby risks I have surely been taking with yours.

Another is that there is a lot of unintuitive weirdness in how to be private, from the terminology, through the bad user interface design, to the mathematical complexity, and this engenders confusion and thence despair. Here, look how easy and amusing it is to steal a Prime Minister’s data.

A third, I argue, is that the first two are leveraged by spies, wanna-be authoritarian governments and creepy business models to obtain our collusion in participatory spying on ourselves. Ick.

So, what can we do, practically, to ensure better “herd confidentiality” for ourselves?

I give you permission to fulminate if you can do it amusingly as with Quinn Norton’s Everything is broken. Indeed, there is almost nowhere left to hide. Even better, fulminate amusingly with hope.

> It’s hard to explain to regular people how much technology barely works, how much the infrastructure of our lives is held together by the IT equivalent of baling wire.
>
> Computers, and computing, are broken.
>
> For a bunch of us, especially those who had followed security and the warrantless wiretapping cases, the revelations weren’t big surprises. We didn’t know the specifics, but people who keep an eye on software knew computer technology was sick and broken. We’ve known for years that those who want to take advantage of that fact tend to circle like buzzards. The NSA wasn’t, and isn’t, the great predator of the internet, it’s just the biggest scavenger around. It isn’t doing so well because they are all powerful math wizards of doom.
>
> The NSA is doing so well because software is bullshit. […] Managing all the encryption and decryption keys you need to keep your data safe across multiple devices, sites, and accounts is theoretically possible, in the same way performing an appendectomy on yourself is theoretically possible.

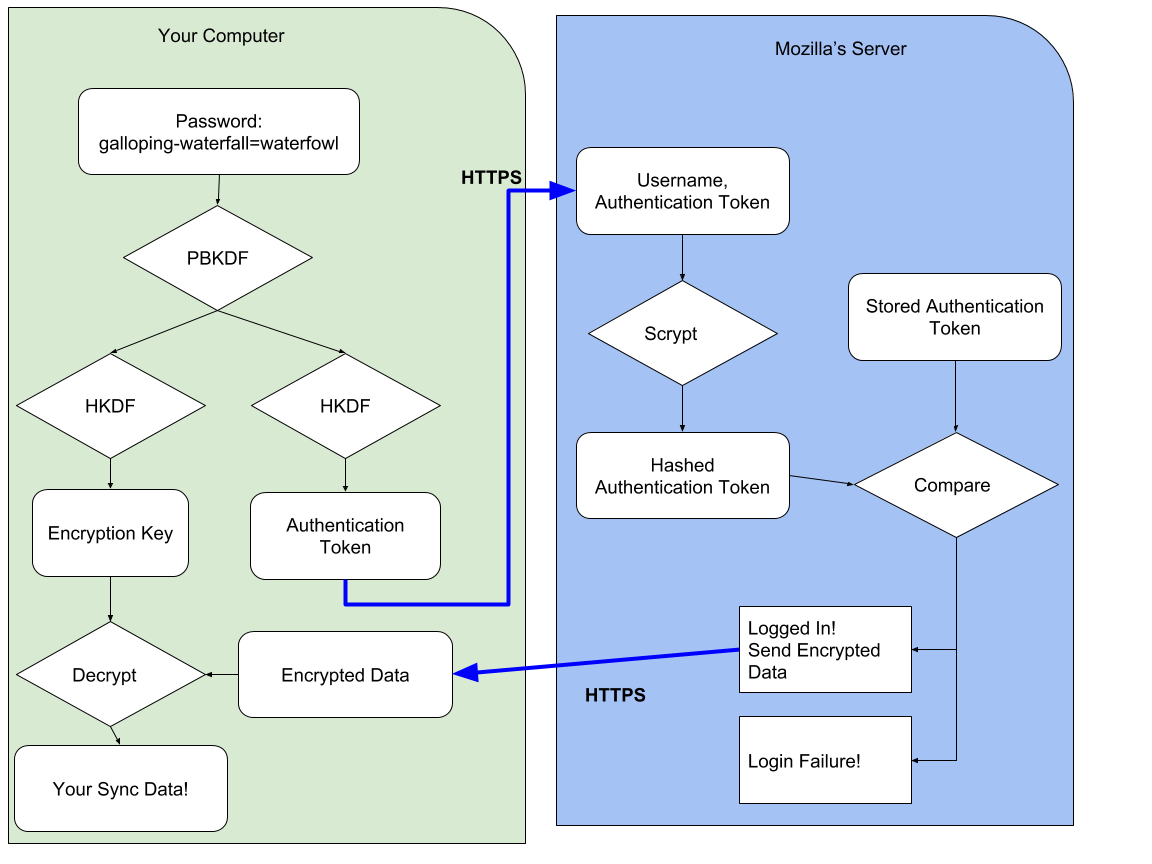

I mean, information technology is complicated. Look at what is involved in sending an SMS.

Or practically, as with Chris Palmer’s State of Security, which points out the specifics of our leaky-sieve information tools. Or Mangopdf stealing a Prime Minister’s passport number.

Here is a lucid explanation by Ross Anderson:

> information insecurity is at least as much due to perverse incentives. Many of the problems can be explained more clearly and convincingly using the language of microeconomics: network externalities, asymmetric information, moral hazard, adverse selection, liability dumping and the tragedy of the commons. […]
>
> In a survey of fraud against autoteller machines, it was found that patterns of fraud depended on who was liable for them. In the USA, if a customer disputed a transaction, the onus was on the bank to prove that the customer was mistaken or lying; this gave US banks a motive to protect their systems properly. But in Britain, Norway and the Netherlands, the burden of proof lay on the customer: the bank was right unless the customer could prove it wrong. Since this was almost impossible, the banks in these countries became careless. Eventually, epidemics of fraud demolished their complacency. US banks, meanwhile, suffered much less fraud; although they actually spent less money on security than their European counterparts, they spent it more effectively.

1 Cryptography for normal people

Slamming PGP, and the cypherpunk model of human behaviour it presumes, is a cottage industry. Indeed, in practice, encrypting is hard. But also look at the systems it is embedded in.

Matthew Green on why it is time for PGP to die. Secushare on 15 reasons they do not like PGP for normal humans.

I would put it differently: I think PGP is useful. However, the cypherpunk uses that everyone thought PGP would be for in the late 90s have not panned out; we do not all encrypt our email that way. I get the impression that PGP developers are frantically churning out new updates and modes of use and wondering why we are all complaining about how the version from 20 years ago was a bad idea. Anyway, usability is important, and even if the PGP people are not to blame, there are some bad assumptions baked in.

> GPG and HTTPS (X509) are broken in usability terms because the conceptual model of trust embedded in each network does not correspond to how people actually experience the world. As a result, there is a constant grind between people and these systems, mainly showing up as a series of user interface disasters. The GPG web of trust results in absurd social constructs like signing parties because it does not work and creating social constructs that weird to support it is a sign of that: stand in a line and show 50 strangers your government ID to prove you exist? Really? Likewise, anybody who’s tried to buy an X509 certificate (HTTPS cert) knows the process is absurd: anybody who’s really determined can probably figure out how to fake your details if they happen to be doing this before you do it for yourself, and of the 1500 or so Certificate Authorities issuing trust credentials at least one is weak or compromised by a State, and all your browser will tell you is “yes, I trust this credential absolutely.” You just don’t get any say in the matter at all.
>
> […]
>
> The best explanation of this in more detail is the Ode to the Granovetter Diagram which shows how this different trust model maps cleanly to the networks of human communication found by Mark Granovetter in his sociological research. We’re talking about building trust systems which correspond to actual trust systems as they are found in the real world, not the broken military abstractions of X509 or the flawed cryptoanarchy of GPG.
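The quoted argument contrasts two trust structures, and a toy sketch makes the difference concrete. Under the X509 model a browser accepts a certificate vouched for by any one of its trusted roots, so if each of N roots were independently compromised with probability p, the chance that at least one is bad would be 1 - (1 - p)^N. The second function is a loose, hypothetical reading of the Granovetter idea: accept a newcomer only if enough of my own contacts already vouch for them. The per-root probability, the independence assumption, the `min_introducers` threshold and all names below are made up for illustration; this is not how browsers, certificate authorities or GnuPG actually compute trust.

```python
"""Toy contrast of two trust models (hypothetical, for illustration only)."""
from typing import Dict, Set


def p_any_ca_compromised(n_cas: int, p_each: float) -> float:
    """Probability that at least one of n_cas roots is compromised.

    Assumes (unrealistically) that each root is compromised independently
    with the same probability p_each. Because a browser accepts a
    certificate signed by *any* trusted root, this weakest-link figure,
    not the average root, is what bounds security.
    """
    return 1.0 - (1.0 - p_each) ** n_cas


def accepted_by_introduction(
    me: str,
    newcomer: str,
    knows: Dict[str, Set[str]],
    min_introducers: int = 2,
) -> bool:
    """Hypothetical introduction rule in the spirit of the Granovetter diagram.

    `knows` maps each person to the set of people they vouch for.
    Accept `newcomer` only if at least `min_introducers` of my direct
    contacts also vouch for them.
    """
    my_contacts = knows.get(me, set())
    introducers = {c for c in my_contacts if newcomer in knows.get(c, set())}
    return len(introducers) >= min_introducers


if __name__ == "__main__":
    # Hypothetical figures: 1500 roots, each with a 1-in-1000 chance of compromise.
    print(f"{p_any_ca_compromised(1500, 0.001):.2f}")

    # A made-up social graph.
    knows = {
        "alice": {"bob", "carol", "dave"},
        "bob": {"erin"},
        "carol": {"erin"},
        "dave": {"mallory"},
    }
    print(accepted_by_introduction("alice", "erin", knows))     # True: bob and carol vouch
    print(accepted_by_introduction("alice", "mallory", knows))  # False: only dave vouches
```

With the made-up p = 0.001 per root, 1500 roots give roughly a 78% chance that at least one is bad, which is the weakest-link point in the quote. The introduction rule instead refuses by default when too few of my own contacts know the newcomer, so a single careless or compromised acquaintance is not enough.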

See also I went to the same school as Julian Assange but we learned different lessons.

When someone says “assume that a public key cryptosystem exists,” this is roughly equivalent to saying “assume that you could clone dinosaurs, and that you could fill a park with these dinosaurs, and that you could get a ticket to this ‘Jurassic Park,’ and that you could stroll throughout this park without getting eaten, clawed, or otherwise quantum entangled with a macroscopic dinosaur particle.”