Institutions and governance for mass conversation

Discourse red in tooth and claw versus the sovereign

February 1, 2020 — May 30, 2023

Content warning:

Discussion of hate speech and various internet censorship flashpoints, including sexual violence; links to critics of speech norms from diverse places on the political spectrum

“Freedom of speech”. “Political correctness”. “Cancel culture”. “Safe spaces”. “Woke police”. My current working theory of the function of these terms is that, in contemporary usage, they are predominantly a natural by-product of humans encountering massively networked communication and trying to bring traditional mental models to bear on it, and that they do not necessarily correspond to anything concrete; they are invasive argument. To a first approximation, “freedom of speech” looks like something one person invokes to accuse a second person of having deprived them of it, but I think people want it to mean more than that. If you expose virtually any common pre-existing Western ideology to the realities of modern discourse, its proponents will find themselves vexed and confused, and will eventually emit this phrase as a pain response.1

What free speech, for example, might mean in the classic public sphere of Habermas is relatively clear, I think. What it means as you gather about the deathbed of your enfeebled grandmother is clearly a different thing, and probably also clear. What it means in the era of micro-targeted filter bubbles might seem similarly clear, but only, IMO, to people who do not think about it. I cannot help but feel that even talking about “freedom of speech” in that context fails to cleave reality at the joints, and, because of that lack of clean efficiency, it plays a role only in someone else’s tired rhetorical strategy.

The semantics of free speech I thus don’t find particularly compelling or useful as a starting point for understanding what is going on ten burns deep into a twitter beef, but because of the rhetorical environment we inhabit, I am regularly required to opine about it, so here are some links. Some will inevitably fall into the trap of treating these concepts as well-defined even in adverse circumstances, for which I apologise in advance; it seems natural for humans to think of difficult, subtle situations as righteous struggles against obvious maleficence.

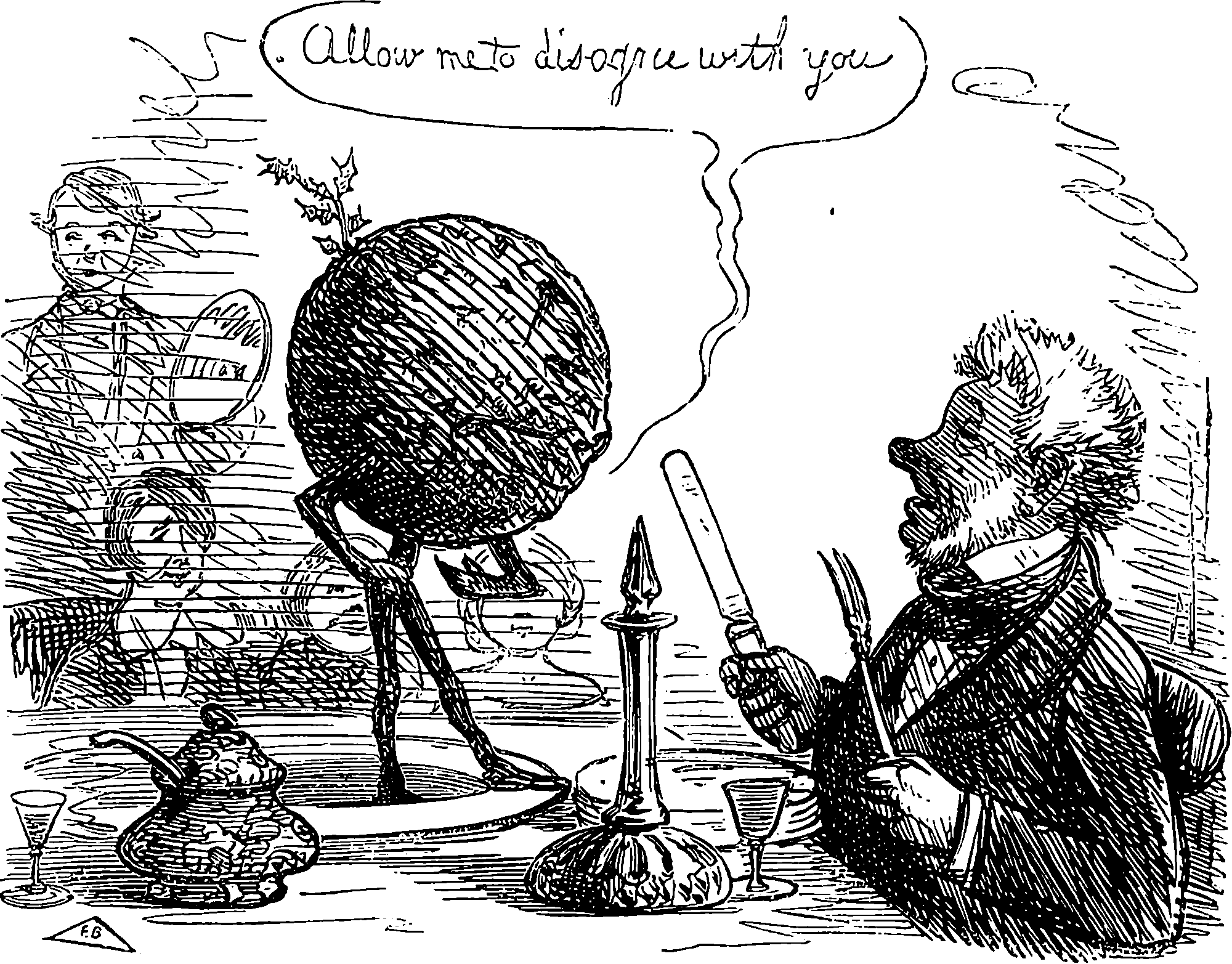

So, back to our buzzwords. What are these things? Who has them? Will they give them back? Have any of these things gone mad? Is renaming things that sound bigoted reverse bigotry and if so, when? Whither enlightenment? Whence liberalism? Where thought police? Why public shaming? How riot? Wow trolling. Are you only saying that because you are in the pocket of Big Word? Are golliwogs banned? Is that a thing? Does it matter if so?

1 What are we arguing about this time?

Maybe I should ditch this page entirely and consider only public opinion dynamics?

A Letter on Justice and Open Debate kicked off one recent iteration of free speech discussion. Techdirt’s Mike Masnick reads it as ineffectual or possibly counterproductive posturing, and dissects the issue into some useful categories, although I shy away from endorsing his overall conclusion. Sean Carroll is not a fan of the Harper’s letter either. Oliver Traldi would like the argument for the entailments of speech consequences to be more inclusive.

Thanksgiving squabbles are a feature, not a bug

Cutting closer to the quick than any of the above, IMO, Lili Loofbourow analysed this propensity from the trenches, suggesting that those of us who do not live on twitter all day misunderstand the mechanisms of infowar:

I get the longing—I even share it—but the naivete is annoying. Online pundits should know (and factor in) that social media as a “public square” where “good faith debate” happens is a thing of the past. Disagreement here happens through trolling, sea-lioning, ratios, dunks.

Does that lead to paranoid readings and meta-debates that seem totally batshit to onlookers who aren’t internet-poisoned? Yup! “All Lives Matter” sounds perfectly reasonable—as a text—unless you know the history of that discourse. (And you’ll sound pretty weird explaining it.)

“Why would you refuse to debate someone who’s simply saying that All Lives Matter?” is the kind of question an Enlightenment subject longing for a robust exchange of ideas might ask. Well, the reason is that most of us know, through bitter experience, that it’s a waste of time.

It wouldn’t be a true exchange. We know by now what “All Lives Matter” signals and that what it signals is orthogonal to what it says. Your fluency in this garbage means you take shortcuts: you don’t have to refute the text to leap to the subtext, which is the real issue.

To outsiders, that leap will look nuts. That’s obviously what all the coded Nazi shit is for and about—the 14 words, the numbers, the OK hand sign that both is and isn’t a white power sign, the Boogaloo junk. They’re all ways to divorce surface meaning from intentional subtext.

Yes, this is bad for discourse! Yes, it inhibits intellectual exchange! Yes, it makes productive dissensus almost impossible. But that’s not because of “cancel culture” or “illiberalism”. It’s because in this discourse environment, good faith engagement is actually maladaptive.

It’s possible and likely that knowledge gaps between people who are online too much and folks who aren’t are making things worse. If Atwood (or whoever) isn’t online much, she might be shocked to see people accuse a nice-looking boy in a Hawaiian shirt of wanting a 2nd civil war.

It might indeed look like cancel culture gone mad. He’s just standing there! Civilly! Offering support to Black Lives Matter protesters, of all things! Can’t we all, whatever our disagreements, come together in support of a good cause?

It’s also possible that people who’ve learned to read through stuff (to whatever bummer of a subtext we’re used to finding there) sometimes overdo it. Some of us might reflexively ignore the actual text—fast-forwarding to the shitty point we “know” is coming even if it isn’t

“Free speech defender,” for ex, will mean something different to an idealist than it will to someone who watched reddit hordes viciously defend revenge porn and sites like r/beatingwomen, r/creepshots, and r/Jewmerica while people whose pictures got posted there begged for help. Free speech! they were told.

Anyway. Sure, good-faith debate would be nice. Instead, the internet pressure-cooked rhetoric. Again: people can watch the same argument be conducted a million times in slightly different ways, and that’s interesting, and a blessing, and a curse

It produced a kind of argumentative hyperliteracy. If you can predict every step of a controversy (including the backlash), it makes perfect sense to meta-argue instead—over what X really means, or implies, or what, down a road we know well, it confirms.

This isn’t great. People talk past each other, assume bad faith. But it’s not the fault of “illiberalism” that good faith is in short supply. And if that’s where your analysis begins, I can’t actually tell whether you’re naive or trolling. And I’m no longer sure which is worse.

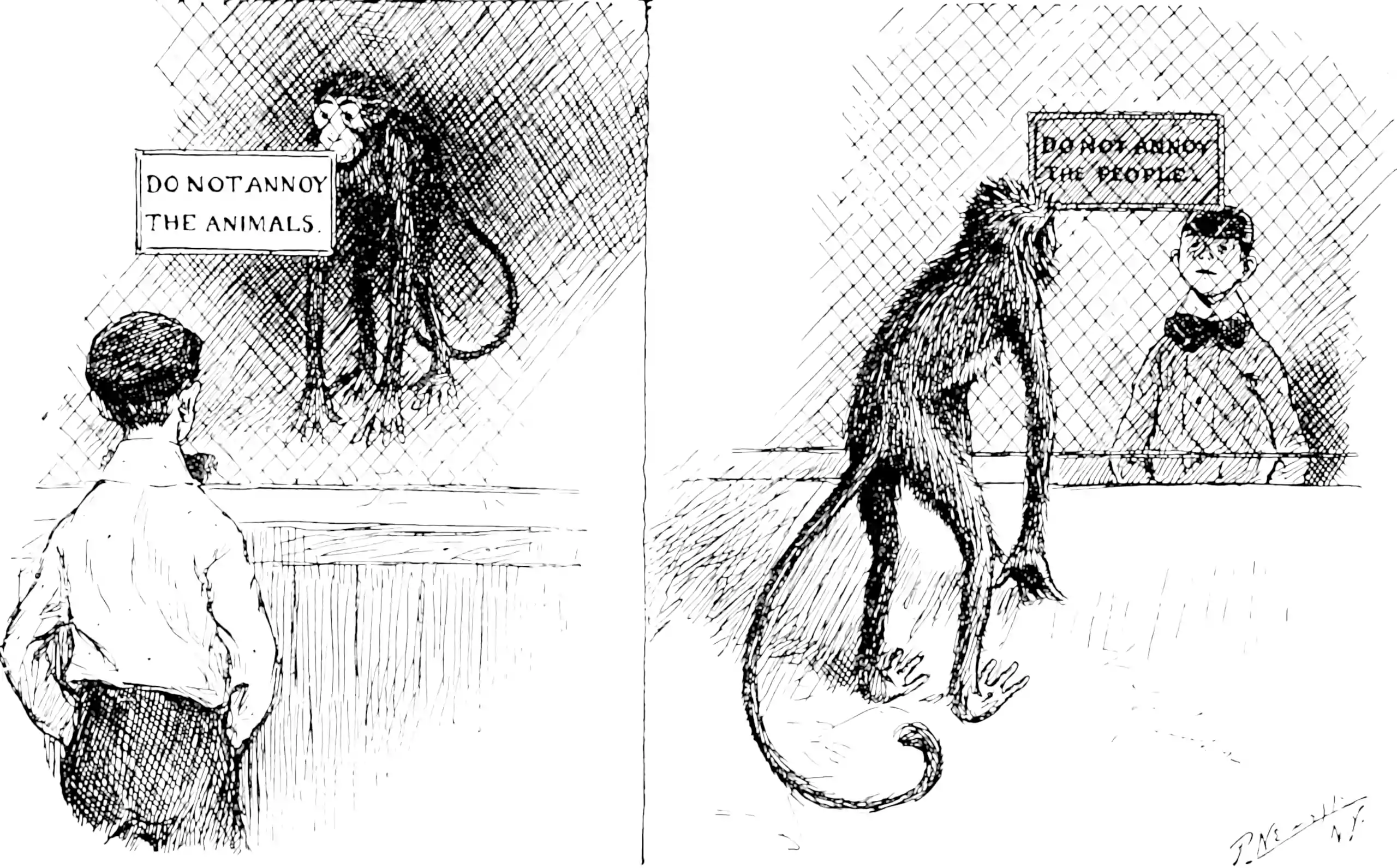

There is a lot going on in this analysis, and I want to reframe it in terms of what it crystallised for me. One implication, for me, is: “In these kinds of debates there is a red queen problem. We are all constantly getting onboarded by being used as cannon-fodder on someone else’s rhetorical battlefield.” This leads me to wonder: do we even need any malice for this effect to arise? Obviously there is some out there, but is it even necessary? Is the mechanism of mass communication itself sufficient, at least as we currently structure it? Take any blow-up (here’s one, PAX being raped to sleep by dickwolves), about some people in the video games world being cross at one another, in which the author thinks the other side is being malicious. Maybe they are, collectively, in that author’s framing. But it is easy to imagine something stronger: that no-one individually is behaving with anything but the best of intent, and everything is still terrible. More plausibly, most participants are behaving with the usual human mix of thoughtlessness, laziness, obstreperousness, incompetence and mediocre intentions, and maybe there are a couple of trolls. Whatever the mix, I think it is possible that even the best intentions and the best competence would not prevent this kind of epiphenomenon from arising; that (to a degree) the malice of sides does not depend upon the malice of individuals.

Rather, the group dynamics of asking people to be nice to one another, or to be productive, just don’t scale up that way. We have mental models based on our success at resolving disputes between peers and kin, and those models do not even make sense in the absence of the shared understanding represented by the group. Maybe there is no response to two people feeling hurt in this context that leads to them sorting it out, now that hundreds of bystanders are invested in it in their own ways. Maybe when 500, or 50,000, individuals are invested, the notion of coming to a mutual personal understanding is simply ill-posed, at least on the scale of a human lifetime. Does that seem plausible?

This is a kind of Dunbar number argument for social cohesion. Suppose that for humans, trust-building and group coordination via conversation and mutual accommodation among \(N\) individuals takes each person a certain amount of time, say \(N\times x\) hours a day, and that when you run out of free hours in the day, the wheels fall off. Instead, two or more subgroups form, and each then by default explains its failure to coordinate with the other in terms of the other group being arsehats. That is, I am supposing that the best-case dynamic under a naïve social structure might still be acrimony. Throw in a few agents provocateurs, bona fide arsehats and sociopaths, and things could be worse.
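To make the time-budget argument concrete, here is a toy sketch under loudly stated assumptions. Each person has a fixed budget of \(H\) free minutes a day for trust maintenance, each other group member costs \(x\) minutes, so the largest cohesive group is \(N^* = \lfloor H/x \rfloor\). The numbers (300 minutes, 2 minutes per peer, chosen so that \(N^*\) lands on Dunbar's famous 150) and the rule that an over-budget group fractures into near-equal subgroups are my illustrative assumptions, not empirical claims.

```python
# Toy version of the time-budget argument. All parameters are hypothetical:
# H = free minutes per person per day available for trust maintenance,
# x = minutes each person must spend per other group member.


def max_cohesive_group(free_minutes_per_day: int, minutes_per_peer: int) -> int:
    """Largest N with N * x <= H, i.e. floor(H / x)."""
    return free_minutes_per_day // minutes_per_peer


def split_into_subgroups(n_people: int, cap: int) -> list[int]:
    """Assume an over-budget group fractures into the fewest near-equal
    subgroups that each fit under the cap (a modelling assumption)."""
    if n_people <= 0:
        return []
    k = -(-n_people // cap)  # ceiling division: number of subgroups needed
    base, extra = divmod(n_people, k)
    return [base + 1] * extra + [base] * (k - extra)


if __name__ == "__main__":
    cap = max_cohesive_group(free_minutes_per_day=300, minutes_per_peer=2)
    print(cap)  # 150
    for n in (100, 500, 50_000):
        groups = split_into_subgroups(n, cap)
        print(n, "->", len(groups), "subgroups, largest", max(groups))
```

Under these made-up parameters, 500 invested people fracture into 4 subgroups and 50,000 into 334. The interesting part is not the numbers but that nothing in the model requires anyone to behave badly.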

Returning to Loofbourow’s analysis of weaponised debate, we might take a yet more negative view of what free speech is in the ill-understood big tent of technologically mediated discourse: a term that, once invoked, turns an awkward conversation into one with sides, and in particular with at least one losing side.

Pop summary, Online Trolls Also Jerks in Real Life: Aarhus University Study:

“We found that people are not more hostile online than offline; that hostile individuals do not preferentially select into online (vs. offline) political discussions; and that people do not over-perceive hostility in online messages,” the researchers wrote. “We did find some evidence for another selection effect: Non-hostile individuals select out from all, hostile as well as non-hostile, online political discussions.”

Disclaimer: I have not read their methodology. But the summary suggests an alternative effect: that online discussions feel hostile because only hostile people hang around to participate in them.
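The selection effect in that summary is easy to underestimate, so here is the arithmetic as a minimal sketch. The assumption, matching the study's framing, is that nobody behaves differently online; the only difference is who sticks around. All probabilities here are hypothetical.

```python
def hostile_share_among_participants(
    p_hostile: float, p_stay_if_hostile: float, p_stay_if_not: float
) -> float:
    """Fraction of the people still in a discussion who are hostile.

    Nobody changes behaviour online in this sketch; the skew comes only
    from different probabilities of staying in the discussion.
    """
    stay_hostile = p_hostile * p_stay_if_hostile
    stay_other = (1 - p_hostile) * p_stay_if_not
    return stay_hostile / (stay_hostile + stay_other)


# Hypothetical numbers: 10% of people are hostile; hostile people stay in a
# thread half the time, everyone else only a tenth of the time.
share = hostile_share_among_participants(0.10, 0.5, 0.1)
print(round(share, 3))  # 0.357, i.e. 5/14
```

With those invented numbers, a population that is 10% hostile yields threads that are about 36% hostile, purely through who leaves. If everyone stayed at the same rate, the thread would simply mirror the population.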

A more invective-flavoured analysis is Venkatesh Rao’s internet of beefs:

Conflict on the IoB is not governed by any sort of grand strategy, or even particularly governed by ideological doctrines. It is an unflattened Hobbesian honor-society conflict with a feudal structure, at the heart of which is an involuntarily anonymous, fungible, angry figure desperate to be seen as significant: the mook.

The semantic structure of the Internet of Beefs is shaped by high-profile beefs between charismatic celebrity knights loosely affiliated with various citadel-like strongholds peopled by opt-in armies of mooks. The vast majority of the energy of the conflict lies in interchangeable mooks facing off against each other, loosely along lines indicated by the knights they follow, in innumerable battles that play out every minute across the IoB.

Almost none of these battles matter individually. Most mook-on-mook contests are witnessed, for the most part, only by a few friends and algorithms, […] In aggregate though, they matter. A lot. They are the raison d’être of the IoB.

The standard pattern of conflict on the IoB is depressingly predictable. A mook takes note of a casus belli in the news cycle (often created or co-opted by a knight, and referred to on the IoB as the outrage cycle), and heads over to their favorite multiplayer online battle arena (Twitter being the most important MOBA) to join known mook allies to fight stereotypically familiar but often unknown interchangeable mook foes. They come prepared either to melee within the core, or skirmish on the periphery, either rallying around the knights riding under known beef-only banners, or adventuring by themselves in unflagged, unheralded side battles.

Adam Elkus, Speedrunning Through The Language-Game:

Make an outrageous and frivolous statement (or amplify one), and then turn the tables on the people who react to it. Point it out and you may encounter outright denial or convoluted hand-waving. Inevitably, the person in the weakest position is he or she that “believes in words”. It is common for people to say insane things simply for attention (its just clickbait!), or justify them by referring to layers of meta-positioning that renders ambiguous what they actually believe (it’s signaling, its opening the Overton Window, etc). That is, assuming that “belief” is a meaningful category at all in an era of advertising and PR. And often it is less sinister than merely bizarre and absurd.

Another tendency of interest was explained neatly by Tanner Greer, for the particularly important case of opinion leaders and pundits and how they are by definition especially likely to be detached from typical experience. Our think pieces about how the world works are likely to come from pundits with a public profile, that being what a public profile is, and the world inhabited by such people is different than the world that the majority inhabit. We are getting our models of the world from people in a special bubble of experiential bias.

This is the first difficulty that comes with a growing follower count on twitter. As the count grows, the number of different communities you are projecting to grows as well. Soon, large numbers of people start to follow because they see you as a representative of a certain strain of thought, or as a key voice in a particular conversation they care about. They are not sympathetic to your ideas or even merely intellectually interested in them; instead they follow you to keep tabs on what you and people like you are saying. Many actually despise you and your ideas to their core (in twitterese, they are a “hate follow”).

My friend Matthew Stinson described this shift as that point where “interactions stop being inquisitive and start getting accusatory. “Points for my side-ism” becomes a real thing.” Twitter’s retweet mechanism makes this problem far worse. All one needs is a snarky RT for these people to take what a thought they dislike and BOOM!, project it into communities it was never intended for as the perfect example of what they all should be hating at that moment.

Thus if you have a large follower account your experience on twitter goes like this: you share a thought optimized for Group X. Members of Group Y, Group Z, and Group V automatically start sharing it as the textbook example of why Group X deserves crucifixion.

Other questions are: Is creating a petition to have someone’s professional recognition revoked censorship? Is public shaming free speech? Is de-platforming censorship? Is presenting both sides neutral? Is Enlightenment liberalism a coherent and desirable thing?

As far as I am concerned, the answer to all of those is clearly “I need more context, please”, which is not a fun or useful answer. Introducing the terminology of a right to free speech (or of the justness of cancellation) does not simplify matters or allow us to analyse things more clearly in public discourse in the age of sound bites, trolling and bots.

Possibly a question about how to optimise discussion would be more useful. Looking at models of emergent behaviour under particular argument dynamics could help, as could deciding what type of public discourse system we would all buy into in order to optimise for correctness, or innovativeness, or robustness, or emotional safety, or something.
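One standard family of such models is bounded-confidence opinion dynamics. The sketch below is the Deffuant-Weisbuch model, swapped in as a generic example of the kind of thing I mean; it is not a model of debate that anyone has validated for social media. Agents only listen to people whose opinions are already within \(\varepsilon\) of their own. The parameter values (`mu`, the step count, the 50 uniformly random starting opinions) are arbitrary choices for illustration.

```python
import random


def deffuant(opinions, epsilon, mu=0.5, steps=20_000, seed=0):
    """Deffuant-Weisbuch bounded-confidence model (a standard toy model,
    not anything proposed in this note).

    Each step picks two random agents; if their opinions differ by less than
    epsilon, each moves a fraction mu of the way towards the other.
    """
    if not 0 < mu <= 0.5:
        raise ValueError("mu must lie in (0, 0.5]")
    rng = random.Random(seed)
    x = list(opinions)
    for _ in range(steps):
        i, j = rng.sample(range(len(x)), 2)
        diff = x[j] - x[i]
        if abs(diff) < epsilon:
            x[i] += mu * diff  # symmetric update, so the mean opinion is conserved
            x[j] -= mu * diff
    return x


def count_clusters(x, gap=1e-3):
    """Count groups of opinions separated by gaps wider than `gap`."""
    xs = sorted(x)
    if not xs:
        return 0
    return 1 + sum(1 for a, b in zip(xs, xs[1:]) if b - a > gap)


if __name__ == "__main__":
    init_rng = random.Random(42)
    start = [init_rng.random() for _ in range(50)]
    for eps in (0.1, 0.3, 1.01):
        print(eps, count_clusters(deffuant(start, eps)))
```

Small tolerance (small \(\varepsilon\)) tends to freeze the population into several non-communicating camps, while large tolerance yields consensus. It is a cartoon, but it is the kind of cartoon in which one could start asking which discussion structures we would want to buy into.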

Case study: this recent blog post on petitioners trying to remove professional honours from Steven Pinker for saying some stuff, which instantly demonstrated to me the power of online discussion to buzz-saw your hand off if you touch it. First, we mostly agreed that we all like Enlightenment virtues, whatever they are; then we accused everyone else of not adhering to said virtues in our interpretation; then we all took a turn fulminating publicly about the other side, and misunderstanding one another strategically/performatively/lazily. This is far from the worst example of this dynamic; it is just that it took place in a corner of the internet beloved of thoughtful nerds, who one would hope can do good debate if anyone can. Lesson: the internet seems not to provide the right structure for fruitful debate even in favourable circumstances.

In this gumbo of weaponized rhetoric and radicalization… what?

At what scales can we address free speech etc.? TBD. In traditional media, we probably had, historically, some idea of how “speech” might be “free”.

The concepts that make sense for the social media moshpit are still evolving.

2 Free as in beer

TODO: Observe that in the attention economy, demanding freedom of speech might indeed be the same as demanding free beer in the real economy: it asks for a price of zero, which is probably not the optimal price. We only have so much attention to go around, and we do not want it flooded with the spam of contextless internet drive-bys.

What are the business models that cost this?

3 On white, het, neurotypical males arguing for free speech norms

I acknowledge that, as a white guy, I come from a group that might on average be expected to advocate for broader free speech norms than some people, because I am less likely to be threatened for holding my views than someone who visibly belongs to a disadvantaged group. Indeed, I think it plausible that I will never experience the full range of precarity and threat from uncomfortable discussions that a traumatised and/or marginalised person would. That is, I am less affected by certain negative consequences of this position than many of the people it affects. These days, ad hominem is an accepted argument, so my position requires a defence.

Here are some defences.

- I personally endorse other positions that would likely affect me more than average (progressive taxation, interventions for equity for the disadvantaged,…), which I present as evidence that I do not argue purely for things that happen to suit my tastes and direct personal convenience.

- Empirically, telling people to remain silent entirely because of who they are makes them angry and more likely to act out, and so it is not a good strategy for achieving the goals of the people who are telling them to be silent. Ergo, I think that blanket policies that tell white, cishet, neurotypical males to shut up are likely to backfire. Norms about specific bad behaviour that is correlated with white, cishet, neurotypical maleness might be much more viable, if that is your jam.

4 Sapir-Whorf politeness

See cheap talk.

5 Scunthorpe problems

How could we lubricate discourse? Banning harmful stuff? Scunthorpe problems are a stylised example of the problem of discourse moderation at large, especially automatic moderation.
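To make the Scunthorpe problem concrete, here is a minimal sketch of naive word filters, using a hypothetical one-word denylist (the mild "ass" stands in for the ruder substring that gives the problem its name). Substring matching produces false positives; whole-word matching trades them for trivially evaded false negatives; blind substring replacement produces the well-known "clbuttic" mangling. None of this is a claim about how any particular platform's moderation actually works.

```python
import re

# Hypothetical one-word denylist, standing in for a real one.
DENYLIST = ["ass"]


def naive_flag(text: str) -> bool:
    """Substring match: the approach that produces Scunthorpe problems."""
    lowered = text.lower()
    return any(term in lowered for term in DENYLIST)


def word_flag(text: str) -> bool:
    """Whole-word match: fewer false positives, trivially evaded by 'a$$'."""
    return any(
        re.search(rf"\b{re.escape(term)}\b", text, flags=re.IGNORECASE)
        for term in DENYLIST
    )


def naive_censor(text: str) -> str:
    """Blind substring replacement, the classic 'clbuttic' failure."""
    return text.replace("ass", "butt")


print(naive_flag("a classic assessment"))    # True  (false positive)
print(word_flag("a classic assessment"))     # False
print(word_flag("don't be an ass"))          # True
print(word_flag("don't be an a$$"))          # False (false negative)
print(naive_censor("a classic assessment"))  # a clbuttic buttessment
```

Every refinement of the rule moves errors around rather than eliminating them, which is the stylised point about automatic moderation.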

Algospeak refers to code words or turns of phrase users have adopted in an effort to create a brand-safe lexicon that will avoid getting their posts removed or down-ranked by content moderation systems. For instance, in many online videos, it’s common to say “unalive” rather than “dead,” “SA” instead of “sexual assault,” or “spicy eggplant” instead of “vibrator”.…

When the pandemic broke out, people on TikTok and other apps began referring to it as the “Backstreet Boys reunion tour” or calling it the “panini” or “panda express” as platforms down-ranked videos mentioning the pandemic by name in an effort to combat misinformation. When young people began to discuss struggling with mental health, they talked about “becoming unalive” in order to have frank conversations about suicide without algorithmic punishment. Sex workers, who have long been censored by moderation systems, refer to themselves on TikTok as “accountants” and use the corn emoji as a substitute for the word “porn”. …

Euphemisms are especially common in radicalized or harmful communities. Pro-anorexia eating disorder communities have long adopted variations on moderated words to evade restrictions. One paper from the School of Interactive Computing, Georgia Institute of Technology found that the complexity of such variants even increased over time. Last year, anti-vaccine groups on Facebook began changing their names to “dance party” or “dinner party” and anti-vaccine influencers on Instagram used similar code words, referring to vaccinated people as “swimmers”.

C&C Alexandra S. Levine’s article in Forbes which has more political speech examples.
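Algospeak is the mirror image of the Scunthorpe problem, and the arms race can be sketched with a toy exact-match down-ranking filter. The word list and posts are hypothetical, and real platforms presumably use more elaborate systems than a word list, but the shape is the same: each code word the moderator adds takes innocent uses down with it.

```python
import re

BLOCKED = {"dead", "suicide", "pandemic"}  # hypothetical down-rank list


def is_downranked(post: str, blocked: set[str]) -> bool:
    """Down-rank a post if any of its words is on the blocked list."""
    words = re.findall(r"[a-z']+", post.lower())
    return any(w in blocked for w in words)


print(is_downranked("life in the pandemic", BLOCKED))  # True
print(is_downranked("life in the panini", BLOCKED))    # False: algospeak slips through

# The moderator catches up by adding the code word...
BLOCKED_V2 = BLOCKED | {"panini"}
print(is_downranked("life in the panini", BLOCKED_V2))          # True
print(is_downranked("my favourite panini recipe", BLOCKED_V2))  # True: collateral damage
```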

Intriguing gallery of deleted images: Zuck Got Me For. Casts a different light on Against Dog Whistle-ism, no?

Hasan Piker Banned From Twitch for Saying ‘Cracker’

Adam Elkus, Welcoming Our New Robot Overlords:

Once upon a time — just a few years ago, actually — it was not uncommon to see headlines about prominent scientists, tech executives, and engineers warning portentously that the revolt of the robots was nigh. The mechanism varied, but the result was always the same: Uncontrollable machine self-improvement would one day overcome humanity. A dismal fate awaited us. We would be lucky to be domesticated as pets kept around for the amusement of superior entities, who could kill us all as easily as we exterminate pests.

Today we fear a different technological threat, one that centers not on machines but other humans. We see ourselves as imperiled by the terrifying social influence unleashed by the Internet in general and social media in particular. We hear warnings that nothing less than our collective ability to perceive reality is at stake, and that if we do not take corrective action we will lose our freedoms and way of life.

6 Neurodiversity and the public sphere

🏗️

The Neurodiversity Case for Free Speech

6.1 Autism spectrum

Autism/allism in communication is clearly super interesting. Would love to return to it some time. For now, the status451 take, Splain it to Me, is interesting. See autism/allism.

6.2 Trigger warnings

Another invasive argument. To my meagre understanding, the point of trigger warnings is to help people with post-traumatic stress disorder (PTSD) manage their symptoms. But providing trigger warnings is also a hot-button issue about which the internet is reliably mad. I am definitely not across why it is a hot-button issue, although I get the sense that the debate is one of those sprawling internet tar pits that I should relish being able to be ignorant of while I can.

Amna Khalid and Jeffrey Aaron Snyder argue, based on (Jones, Bellet, and McNally 2020), that the evidence does not support the hypothesis that trigger warnings reduce trauma in PTSD sufferers in particular, nor in people in general, and so they are probably not helpful in educational contexts. See also Colleen Flaherty, New study says trigger warnings are useless. Does that mean they should be abandoned?, which summarises (Sanson, Strange, and Garry 2019).

I am not quite clear what the divide is supposed to be between trigger warnings and the classic content warnings seen in workaday media classification systems (only suitable for minors accompanied by an adult, etc.). Is the distinction that trigger warnings presume the reader is a PTSD sufferer? Anyway, it doesn’t seem controversial that we provide parental guidance warnings to manage children’s access to content that society classifies as inappropriate for children. It does not seem much of a stretch to regard it as compassionate to enable individual adults likewise to manage their exposure to psychologically difficult content for themselves, regardless of whether it is helpful or not. Once again, I presume that the internet would not be mad if the crux of the argument were anything so simple.

Side question: does classic content classification help children in any verifiable sense?

7 Metaphor for communicative norms

See intelligibility as compatibility for an analysis of speech in terms of technical standards.

8 What would a functional public sphere look like?

There are a few projects like this about, attempting to be the liberal discourse they wish to see in the world:

Persuasion is a publication and community for everyone who shares three basic convictions:

- We seek to build a free society in which all individuals get to pursue a meaningful life irrespective of who they are.

- We believe in the importance of the social practice of persuasion, and are determined to defend free speech and free inquiry against all its enemies.

- We seek to persuade, rather than to mock or troll, those who disagree with us.

In the past years, the political and intellectual energy has been with illiberal movements. Too often, the advocates of free speech and free institutions have been passive, even fatalistic. It is high time for those of us who believe in these enduring ideals to stand up for our convictions.

Or, more exotic for me, Open Source Defense — “100% gun rights, 0% culture war”.

Tim Sommers, This Title Only Appears to Convey Information on some issues in posturing around rights and marginalisation in free speech, channeling Leiter (2016).

We’ve got plenty of speech. We’ve got precious little public agreement on basic facts. The reign of massive amounts of unregulated free speech has not led to democracy, it’s led to a world of “alternate facts”.

If we want free speech that contributes to a marketplace of ideas then, despite the risks it poses to expressive autonomy and the frightening question of exactly who is going to do the regulating, we’ve got to find ways to regulate it.

Jonathan Haidt stuff is worth mentioning. I was not especially taken with his previous models of morals, but his diagnoses of social media are interesting. His solutions need some polish IMO, but the collective research review is worth the price of admission.

Tanner Greer’s rejoinder is also interesting.

8.1 Management of the discourse commons

A powerful idea that I want to return to, in public sphere business models.

8.2 Inclusive governance for speech acts

It gets much harder when the problem is writ large. What may we say about the speech of thousands of people we will never meet or consult, about what is harmful for them to say to audiences whom they, in turn, may never consult? For a start, this is normally a tokenism/table-stakes problem, i.e. one where everyone disagrees about how simple it truly is. But how we would improve the governance here is beyond me.

Let us pick an innocuous case, the Inclusive Naming Initiative. This is a small-stakes example about which I personally have no strong feelings (I confess to mild scepticism about the process, which I will expand upon), and which has the great virtue of documenting its own shortcomings. About that I do have strong feelings: it is rare and brave and should be celebrated. They maintain a denylist of words that are not allowed in software, upon pain of offending projects being excluded from various software distributions and thus failing to achieve their project goals.2

TBD. I am interested in the kind of example this represents. I should explain what the document actually does, but I’m just dumping some notes for later.

Pro:

- Represents a concession to people who might reasonably feel they have been offered few concessions

- Documents the various ways that language can be hurtful, which, TBH, included some things that had never occurred to me before.

- High transparency; e.g. openly explains that this is a contingent, contextual and changing list designed to accommodate the cultural norms of a particular Anglophone community.

Con:

- the vast majority of the stakeholders on either side of this issue had no say in the decisions made for them.

- The price is borne predominantly by small volunteer community groups, even though it was decided largely by large corporations.

- Despite pro #3, makes no accommodation for cultures other than its particular target audience.

The pair of pro and con #3 is a beautiful example.

The underlying assumption of the whitelist/blacklist metaphor is that white = good and black = bad. Because colors in and of themselves have no predetermined meaning, any meaning we assign to them is cultural: for example, the color red in many Southeast Asian countries is lucky, and is often associated with events like marriages, whereas the color white carries the same connotations in many European countries. In the case of whitelist/blacklist, the terms originate in the publishing industry—one dominated by the USA and England, two countries which participated in slavery and which grapple with their racist legacies to this day.

They have shown their working, which reassures me that I could not have deduced the badness of this word from its history or usage without being immersed in the history of the UK or the US. To be honest, I am pretty sure that most of the anglophone world has used black in a similar way.

Also, it is possible that a substantial number of people experience real and pressing distress caused by wanton use of the word blacklist, sufficient that it is reasonable to ask global developer communities to make a concession for their benefit.

On the other hand, they have made no attempt to cost the coding hours these changes will require. Non-technical people often assume it is a simple matter of search and replace; examining a typical open-source project’s attempt to sanitise its code shows that it is often a tortuous and expensive sacrifice of volunteer labour.
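To illustrate why the search-and-replace assumption underestimates the cost, here is a minimal sketch. The module `upstream_lib`, the function `load_blacklist` and the config key `blacklist_path` are all hypothetical. A blind, case-insensitive rename rewrites a name owned by someone else and mangles a constant's casing, and any renamed public name, such as a config key that users already have in their files, needs a compatibility shim, a deprecation period, documentation and tests.

```python
import re
import warnings

SOURCE = (
    "from upstream_lib import load_blacklist\n"    # name owned by a third party
    'BLACKLIST_PATH = config["blacklist_path"]\n'  # key in users' existing files
)


def naive_rename(src: str) -> str:
    """What 'just search and replace' does: rewrites names it does not own
    and flattens case, because the replacement string is fixed."""
    return re.sub("blacklist", "denylist", src, flags=re.IGNORECASE)


def read_blocklist_path(cfg: dict) -> str:
    """Compatibility shim: prefer the new key, fall back to the legacy one.
    Each renamed public name needs something like this, plus docs and tests."""
    if "denylist_path" in cfg:
        return cfg["denylist_path"]
    if "blacklist_path" in cfg:
        warnings.warn(
            "'blacklist_path' is deprecated; use 'denylist_path'",
            DeprecationWarning,
            stacklevel=2,
        )
        return cfg["blacklist_path"]
    raise KeyError("denylist_path")


print(naive_rename(SOURCE))
legacy_config = {"blacklist_path": "/etc/app/blocked.txt"}  # written before the rename
print(read_blocklist_path(legacy_config))
```

The rename itself is one line; the imports that no longer resolve, the constant that now looks like `denylist_PATH`, and the shim that must live for some deprecation period are where the volunteer hours go.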

There is no divinely appointed correct way of weighing up the relative costs and benefits to the affected communities. I certainly do not consider myself qualified to measure the relative harm. I have no skin in that game.

It is possible to imagine that if the communities who did have skin in this game were in communication with one another, they might come to an agreement about mutual concessions to each other’s sensibilities, perhaps compromises going forward, and maybe reciprocity on future matters of interest. And so the process of coalition building would go on.

But there is no channel of communication, no chance for mutual consent, no process to solicit consent. Not between hundreds of thousands of stakeholders who are not in touch with most of the other participants. The experience for the vast majority of people involved in this (admittedly low stakes) battle is ultimately one of appealing to an external authority to pick a winner in the governance of the shared discourse commons. I do not know how to improve this process, but it feels unsatisfactory.

9 Context collapse

There was a term I was looking for here: context collapse (Brandtzaeg and Lüders 2018; Davis and Jurgenson 2014; Georgakopoulou 2017; Marwick and boyd 2011; Pagh 2020):

- Understanding Context Collapse Can Mean a More Fulfilling Online Life

- Online Identity - Is Your Social Media Persona Real?

- “It’s Not Cancel Culture—It’s A Platform Failure.”

- Twitter, the Intimacy Machine

I think I had heard this term before, but it began to feel newly relevant after I encountered this Tressie McMillan Cottom interview:

One of the things I like to say to people is that we think that broadening access in any realm — we do this with everything, by the way. It’s such an American way to approach the world — we think that broadening access will broaden access on the terms of the people who have benefited from it being narrowed, which is just so counterintuitive.

Broadening access doesn’t mean that everybody has the experience that I, privileged person, had in the discourse. Broadening it means that we are all equally uncomfortable, right? That’s actually what pluralism and plurality is. It isn’t that everybody is going to come in and have the same comforts that privilege and exclusion had extended to a small group of people. It’s that now everybody sits at the table, and nobody knows the exact right thing to say about the other people.

Well, that’s fair. That means we all now have to be thoughtful. We all have to consider, oh, wait a minute. Is that what we say in this room? We all have to reconsider what the norms are, and that was the promise of like expanding the discourse, and that’s exactly what we’ve gotten.

Connection to cheap talk.

TODO: Touch upon: context of harmful words. You don't want to discuss a rape without giving sexual abuse survivors the option to tap out, lest you aggravate their trauma. You don't want to discuss vomiting in front of one of my sympathetic-vomiting friends (unless you want a lap full of vomit). Tweet GAMESTONK too much and you may be manipulating the stock market. If I play my massive sound system outside your house, I probably want to check that it's OK with you first. At the same time, the words that are harmful in one context are necessary in another.

In the examples above there are some easy cases where talking about the thing is obviously a great idea: a sexual abuse survivor needs to represent their experiences to a counsellor or a court of justice. My vomity friend might need to talk to a doctor about it sometimes. I am pleased to be able to mention various stocks without giving financial advice. If no-one ever plays a sound system, we will never get to dance. In these contexts the communication is not harmful, or the harm is balanced by a benefit.

OK, but the above contexts are easy. In a room full of friends we can easily work out who is queasy. Abuse survivors can, ideally, communicate their needs to people they trust. You can ask your neighbours about the sound system, and so forth.

What about when the room has 8 billion people in it? What about a room that usually has your friends in it but sometimes has millions of people in it? Is Twitter designed to stoke outrage by being precisely that?

10 Asymmetric costs in communication

There are many framings of this idea. DRMacIver's note on The costs of being understood is my favourite. The one that predominates is not that one, though. Most of us are more familiar with…

10.1 Microaggressions

A bad name for an important problem, which is itself, appropriately enough, partly a problem about bad names.

See microstressors.

11 Extremist speech

I am curious about the empirical research on the harm done by extremist speech, and on radicalisation. For now, here is a single article that links to some of the research I would like to know more about: How Much Real-World Extremism Does Online Hate Actually Cause?

In 2013, the RAND corporation released a study that explored how Internet usage affected the radicalization process of 15 convicted violent extremists and terrorists. […]

The researchers found that the Internet generally played a small role in the radicalization process of the individuals studied, though they did find support for the idea that the Internet may act as an echo chamber and enhance opportunities to become radicalized. However, the evidence did “not necessarily support the suggestion that the internet accelerates radicalization, nor that the internet allows radicalization to occur without physical contact, nor that the internet increases opportunities for self-radicalization, as in all the cases reviewed … the subjects had contact with other individuals, whether virtually or physically”.

The limited empirical evidence that exists on the role that online speech plays in the radicalization-to-violence journey suggests that people are primarily radicalized through experienced disaffection, face-to-face encounters, and offline relationships. Extremist propaganda alone does not turn individuals to violence, as other variables are at play.

Another analysis compiled every known jihadist attack (both successful and thwarted) from 2014 to 2021 in eight Western countries. “Our findings show that the primary threat still comes from those who have been radicalized offline,” the authors concluded. “[They] are greater in number, better at evading detection by security officials, more likely to complete a terrorist attack successfully and more deadly when they do so.”

12 Incoming

More morsels? Here are some think-pieces.

Elizabeth Harman, on academic freedom, argues that racist research must be named and allowed:

I do want to make an important concession. Should we subject our norms of what counts as research misconduct to ongoing scrutiny? Absolutely. Should that ongoing scrutiny take our society’s pervasive racism into account? Yes. But academic freedom must continue to protect much research that is immoral in one or another way. This immorality needs to be recognized and discussed, but not punished.

Here are yet more.

- Moderation Is Different From Censorship

- Scholar's Stage on Twitter dynamics

- Julia Galef’s Free speech roundup.

- Robin Hanson’s throwaway line about costing free speech.

I would like to have a link on the difficulties of coordinating on justice via mob rule, public shaming and petitions: something that looks at the coordination problems, and separates the problem of identifying injustice/inefficiency/existential threat from the problem of calibrating a political response. Sideline in altruistic punishment and feedback loops. TBD.

-

A good articulation of a point that we seem to find hard to remember:

Imagine you’re at a dinner party, and you’re getting into a heated argument. As you start yelling, the other people quickly hush their voices and start glaring at you. None of the onlookers have to take further action—it’s clear from their facial expressions that you’re being a jerk. In digital conversations, giving feedback requires more conscious effort. Silence is the default. Participants only get feedback from people who join the fray. They receive no signal about how the silent onlookers perceive their dialogue. In fact, they don’t receive much signal that onlookers observed the conversation at all. As a result, the feedback you do receive in digital conversations is more polarized, because the only people who will engage are those who are willing to take that extra step and bear that cost of wading into a messy conversation.

Michele Coscia, Avoiding Conflicts on Social Media Might Make Things Worse

All Minus One, an Australian ❄️🍑 club.

-

The news that Arif Ahmed is to be appointed the UK’s first ‘Free Speech Tsar’ — a position that apparently comes with “the power to investigate universities and student unions in England and Wales that wrongly restrict debate” and to “advise the sector regulator on imposing fines for free speech breaches” — is disappointing for various reasons. One of them (not the most important one) is that it suggests Britain’s capacity to name things continues to decline. To see this once-great country reach for a foreign title that not only originates with one second-rate empire trying to recall the glory of the Romans but that was first popularized as a job-title within the administrative apparatus of another is really quite sad, given that England has so many equally preposterous but largely home-grown (or at least Norman French) titles available right on its own doorstep…

Here I present a few alternatives […] As an alternative to “Free Speech Tsar”, consider one or more of the following: The Duke of Discourse. Warden of All Chit-Chat. Equerry of Arguments. Gold Stick To The Point. The Earl of Axiom. The Keeper of the King’s Premises. The Wheedle Beadle. Chief Constable Counterexample. The Postillion of Positing. The Justiciar of Just One More Thing. The Marquess of My Question is More of a Comment. The Archbishop of Banterbury. The Lord Privy Sealion. Groom of the Discourse. Chancellor of the Factchequer. Red Herring Pursuivant. Master of the Eyerolls.

As should be clear, the capacity exists within the UK to develop an entire peerage system of Free Speech aristocrats of all ranks. I strongly encourage Rishi Sunak to implement just such a scheme as soon as is practicable.

-

if we’re going to take free speech seriously, we have to start thinking about it outside the forms given to us by people like Elon Musk.

Costs of speech: billboards kill people (Hall and Madsen 2022)

James Huffman, The Cancellation of Bertrand Russell

Substack doesn’t like Nazis but will defend to the death their right to be heard (and potentially monetised)

Liberalism’s last laugh - New Statesman

Shklar’s powerful notion of “putting cruelty first” is at the heart of cancel culture. Or as the American philosopher Richard Rorty parsed Shklar’s phrase: “Liberals are the people who think that cruelty is the worst thing we do.” Rorty himself attempted to appropriate irony for humane purposes by formulating the concept of “liberal irony”, in which people are aware of the moral tension between shifting personal and historical circumstances and a universal commitment to humanity. It’s a uselessly beautiful idea. But it’s definitely not funny.

13 References

Footnotes

I am increasingly sympathetic to the idea that most arguments I find myself in, in the public sphere, are unresolvable. The reason is this: if any given argument were resolvable, it would likely have resolved itself by the time I got there, so it follows that I would be unlikely to find myself in it while wandering randomly around the internet. The arguments that dominate are the ones with sufficient memetic force to keep everyone involved perpetually stoking the flames, so that they are still hot by the time I arrive.↩︎

Blacklist is denylisted.↩︎