Probabilistic programming

Doing statistics using the tools of computer science

October 2, 2019 — February 11, 2022

Probabilistic programming languages (PPLs). A probabilistic programming system is a system for specifying stochastic generative models, and reasoning about them. Or, as Fabiana Clemente puts it

Probabilistic programming is about doing statistics using the tools of computer science.

The program represents our random generative model, and conditioning upon the observed data gives us updated distributions over parameters, or prediction, or whatever. TYpically this will automatically account for the graphical model structure.

By convention, when I say ‘probabilistic programming’ rather than merely inference, I am understood to be implying a certain ambition; I am expressing hope that my technique might succeed in doing inference for complicated models, possibly ones without tractable likelihoods of any kind, maybe even Turing-complete models. Usually I would not say that I was using a PPL for a garden-variety hierarchical model, because that is not ambitious enough. That more simple usage is also permitted, although it might be regarded as an up-sell. Hope in this context means something like “we have the programming primitives to, in principle, possibly approximately, express the awful crazy likelihood structure of a complicated problem, and to do something that looks like it might estimate the correct conditional density, but also for any given problem we are on our own in demonstrating that it actually does solve the desired problem to the desired accuracy in the desired time.”

Many of these tools use Markov Chain Monte Carlo sampling, which turns out to be a startlingly general way to grind out estimates of the necessary conditional probability distributions, especially if we don’t think about convergence rates too hard. Some frameworks enable other methods, such as classic conjugate priors for easy (sub-)models, variational methods of all stripes, including reparameterisation flows, and many hybrids of all of the above.

See George Ho of PyMC3/PyMC4 for an in-depth introduction into what might be desirable to solve these problems in practice, to wit,

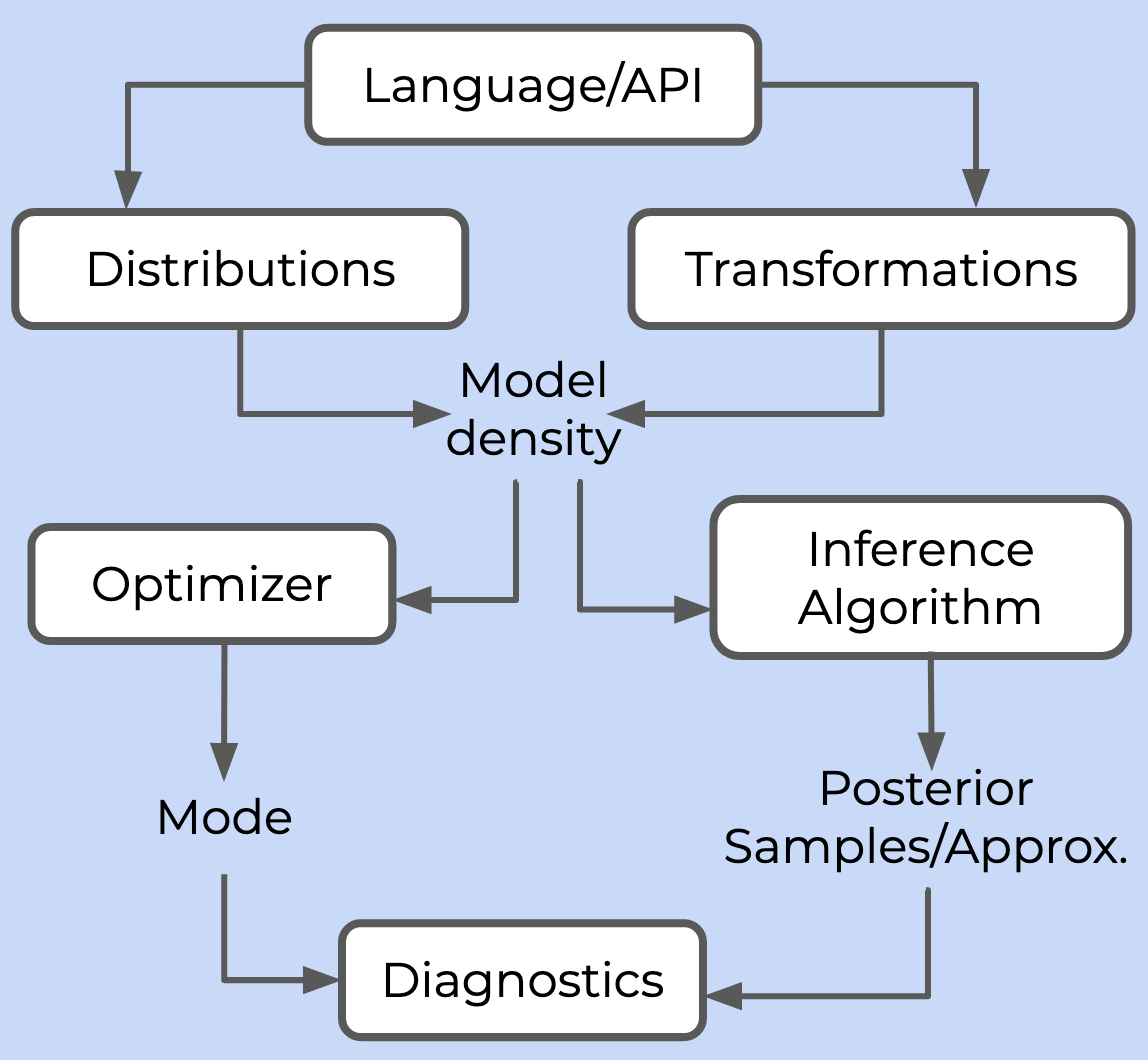

A probabilistic programming framework needs to provide six things:

- A language or API for users to specify a model

- A library of probability distributions and transformations to build the posterior density

- At least one inference algorithm, which either draws samples from the posterior (in the case of Markov Chain Monte Carlo, MCMC) or computes some approximation of it (in the case of variational inference, VI)

- At least one optimizer, which can compute the mode of the posterior density

- An autodifferentiation library to compute gradients required by the inference algorithm and optimizer

- A suite of diagnostics to monitor and analyze the quality of inference

See also Col Carroll’s overview of several trendy frameworks. This includes some I did not include here due to exhaustion and choice paralysis. Check the dates on all advice in this area; as a hip research topic, there is a constant flux of new frameworks into and out of use.

1 Tutorials and textbooks

With Tom Blau, I recently wrote a tutorial introduction to pyro, csiro-mlai/hackfest-ppl

Rob Salomone’s course is excellent and starts with great examples.

Jan-Willem van de Meent, Brooks Paige, Hongseok Yang, Frank Wood, An Introduction to Probabilistic Programming

Richard McElreath, Statistical Rethinking has gone viral as an introduction to some of this stuff. It is available on O’Reilly (free for CSIRO people). There is a PyMC3 and a numpyro version.

Kevin Murphy’s probml/pml-book: Probabilistic Machine Learning

Wi Ji Ma, Konrad Paul Kording, Daniel Goldreich, Bayesian models of perception and action

Noah D. Goodman, Joshua B. Tenenbaum et al, Probabilistic Models of Cognition - 2nd Edition

Noah D. Goodman and Andreas Stuhlmüller, The Design and Implementation of Probabilistic Programming Languages

Barber (2012)

Bishop (2006)

1.1 MCMC considerations

Maybe see MCMC for now.

1.2 Variation inference considerations

Maybe see variational inference for now.

2 Toolkits

There are too many PPLs. Some of these I have actually played with and they are documented below.

2.1 Pyro

Pyro is my main daily workhorse tool at the moment, so it now gets its own notebook.

pytorch + bayes = pyro. (Pradhan et al. 2018)

2.2 Stan

Stan is the inference toolbox for broad classes of Bayesian model and the de facto reference point. If your problem CAN be handled by Stan, this is a highly recommended option. Often seen in concert with brms which makes it easier to use for various standard regression models.

Stan breaks down in certain circumstances. It does not naturally express neural-network models well, and indeed we have reason to be concerned that the posterior simulations will be nasty with very high dimensional parameter vectors

Stan does support some variational inference, although last time I checked (2017) it was insufficiently flexible todo anything useful for me and not recommended.

See the Stan notebook.

2.3 Forneylab.jl

Seems to have gone very hardcore on variational message passing (van de Laar et al. 2018; Akbayrak, Bocharov, and de Vries 2021).

2.4 Turing.jl

Turing.jlis a Julia library for (universal) probabilistic programming. Current features include:

- Universal probabilistic programming with an intuitive modelling interface

- Hamiltonian Monte Carlo (HMC) sampling for differentiable posterior distributions

- Particle MCMC sampling for complex posterior distributions involving discrete variables and stochastic control flows

- Gibbs sampling that combines particle MCMC and HMC

It is one of many julia options, and includes MCMC toolkit AdvancedHMC.jl.

2.5 probflow

2.6 Gen

Gen:

Gensimplifies the use of probabilistic modeling and inference, by providing modeling languages in which users express models, and high-level programming constructs that automate aspects of inference.Like some probabilistic programming research languages, Gen includes universal modeling languages that can represent any model, including models with stochastic structure, discrete and continuous random variables, and simulators. However, Gen is distinguished by the flexibility that it affords to users for customizing their inference algorithm.

Gen’s flexible modeling and inference programming capabilities unify symbolic, neural, probabilistic, and simulation-based approaches to modeling and inference, including causal modeling, symbolic programming, deep learning, hierarchical Bayesiam modeling, graphics and physics engines, and planning and reinforcement learning.

It has an impressive talk demonstrating how you would interactively clean data using it.

2.7 Edward/Edward2

From Blei’s lab, leverages trendy deep learning machinery, tensorflow for variational Bayes and such.

This is now baked in to tensorflow as a probabilistic programming interface. I do not use it because I do not trust Tensorflow.

2.8 TensorFlow Probability

Another Tensorflow entrant. Low-level and messy. Used in Edward2, above, but presumably more basic. The precise relationships between these tensorflow things is complicated enough that it is a whole other research project to pick it apart.

2.9 pyprob

pyprob: (Le, Baydin, and Wood 2017)

pyprob is a PyTorch-based library for probabilistic programming and inference compilation. The main focus of this library is on coupling existing simulation codebases with probabilistic inference with minimal intervention.

The main advantage of pyprob, compared against other probabilistic programming languages like Pyro, is a fully automatic amortized inference procedure based on importance sampling. pyprob only requires a generative model to be specified. Particularly, pyprob allows for efficient inference using inference compilation which trains a recurrent neural network as a proposal network.

In Pyro such an inference network requires the user to explicitly define the control flow of the network, which is due to Pyro running the inference network and generative model sequentially. However, in pyprob the generative model and inference network runs concurrently. Thus, the control flow of the model is directly used to train the inference network. This alleviates the need for manually defining its control flow.

The flagship application seems to be etalumis (Baydin et al. 2019) a framework with emphasis AFAICT on Bayesian inverse problems.

2.10 PyMC3

The PyMC family creates many probabilistic programming ideas and blogposts and also code, and has been doing so since the mid 2000s. They seem an excellent destination to learn about probabilistic programming, although not the best place to find stable, finished products, even by the mercurial standards of this field.

PyMC3 is python+Theano, although they have ported theano to jax and renamed it Aesara. They claim this is fast and it might be an easy way to access jax-accelerated sampling if Numpyro feels too exhausting. 1

See Chris Fonnesbeck’s example in python.

Thomas Wiecki, Bayesian Deep Learning demonstrates some variants with PyMC3.

2.11 Mamba.jl

Mamba is an open platform for the implementation and application of MCMC methods to perform Bayesian analysis in julia. The package provides a framework for (1) specification of hierarchical models through stated relationships between data, parameters, and statistical distributions; (2) block-updating of parameters with samplers provided, defined by the user, or available from other packages; (3) execution of sampling schemes; and (4) posterior inference. It is intended to give users access to all levels of the design and implementation of MCMC simulators to particularly aid in the development of new methods.

Several software options are available for MCMC sampling of Bayesian models. Individuals who are primarily interested in data analysis, unconcerned with the details of MCMC, and have models that can be fit in JAGS, Stan, or OpenBUGS are encouraged to use those programs. Mamba is intended for individuals who wish to have access to lower-level MCMC tools, are knowledgeable of MCMC methodologies, and have experience, or wish to gain experience, with their application. The package also provides stand-alone convergence diagnostics and posterior inference tools, which are essential for the analysis of MCMC output regardless of the software used to generate it.

2.12 Greta

greta models are written right in R, so there’s no need to learn another language like BUGS or Stan

greta uses Google TensorFlow

I wonder how it uses Google Tensorflow.

2.13 Soss.jl

Soss is a library for probabilistic programming.

Let’s jump right in with a simple linear model:

using Soss

m = @model X begin

β ~ Normal() |> iid(size(X,2))

y ~ For(eachrow(X)) do x

Normal(x’ * β, 1)

end

end;In Soss, models are first-class and function-like, and “applying” a model to its arguments gives a joint distribution.

Just a few of the things we can do in Soss:

- Sample from the (forward) model

- Condition a joint distribution on a subset of parameters

- Have arbitrary Julia values (yes, even other models) as inputs or outputs of a model

- Build a new model for the predictive distribution, for assigning parameters to particular values

How does it do all these things exactly?

2.14 Miscellaneous julia options

DynamicHMC.jl does Hamiltonian/NUTS sampling in a raw likelihood setting.

Miletus is a financial product and term-structure modeling package that is available for quant stuff in Julia as part of the paid packages offerings in finance. Although it looks like it is also freely available?

2.15 Inferpy

InferPy seems to be a higher-level competitor to Edward2?

2.16 Zhusuan

ZhuSuan is a python probabilistic programming library for Bayesian deep learning, which conjoins the complimentary advantages of Bayesian methods and deep learning. ZhuSuan is built upon Tensorflow. Unlike existing deep learning libraries, which are mainly designed for deterministic neural networks and supervised tasks, ZhuSuan provides deep learning style primitives and algorithms for building probabilistic models and applying Bayesian inference. The supported inference algorithms include:

- Variational inference with programmable variational posteriors, various objectives and advanced gradient estimators (SGVB, REINFORCE, VIMCO, etc).

- Importance sampling for learning and evaluating models, with programmable proposals.

- Hamiltonian Monte Carlo (HMC) with parallel chains, and optional automatic parameter tuning.

2.17 Church/Anglican

Church is a general-purpose Turing-complete Monte Carlo lisp-derivative, which is unbearably slow but does some reputedly cute tricks with modeling human problem-solving, and other likelihood-free methods, according to creators Noah Goodman and Joshua Tenenbaum.

See also Anglican, which is the same but different, being built in clojure, and hence also leveraging browser Clojurescript.

2.18 WebPPL

WebPPL is a successor to Church designed as a teaching language for probabilistic reasoning in the browser. If you like Javascript ML.

2.19 BAT

See also BAT the Bayesian Analysis Toolkit, which does sophisticated Bayes modelling although AFAICT uses a fairly basic Metropolis-Hastings Sampler?

3 Incoming

ArviZ is a Python package for exploratory analysis of Bayesian models. Includes functions for posterior analysis, data storage, sample diagnostics, model checking, and comparison.

The goal is to provide backend-agnostic tools for diagnostics and visualizations of Bayesian inference in Python, by first converting inference data into xarray objects. See here for more on xarray and ArviZ usage and here for more on

InferenceDatastructure and specification.

4 References

Footnotes

Beware: PyMC4, despite what you might think due to the jetsam of an earlier hype cycle, is discontinued in favour of PyMC3. AFAICT PyMC4 was intended to be a tensorflow-backed system, so this is some additional evidence that Tensorflow blighs every probabilistic programming system it touches.↩︎